- Re: Questions about Machine Learning in Appsheet P...

Hello!

May I ask some questions about the machine learning behind AppSheet predictions?

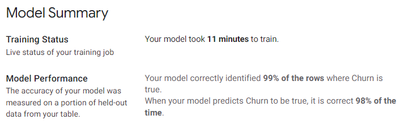

1) For Classification (Target col = Yes/No), I got this result:

a) Is the 1st statement (i.e. “Your model correctly identified 99% of the rows where Churn is true.”) actually the “Recall” value in machine learning, or is it “Accuracy”?

b) How about the 2nd statement (i.e. “When your model predicts Churn to be true, it is correct 98% of the time”)? Is this “Precision” in machine learning?

2) For Classification (Target col = Yes/No), if the data in the Target column is imbalanced (e.g. 80% Yes and 20% No), will the predictive model in AppSheet apply some balancing method to address this?

The result shown in Question 1 was produced with about 5600 rows of data, with 83% Yes and 17% No in the Target column. The result looks too good, which makes me wonder whether it is genuine or an artifact of the imbalanced data.

3) If AppSheet models do not handle imbalanced data, do you have any suggestions for handling the imbalance manually before loading the data into AppSheet, given that we really don’t have sufficient data for the minority case?

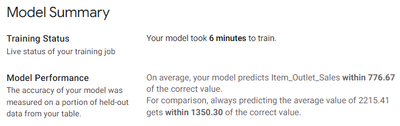

4) For Regression, I got this result:

Can I check whether 776.67 is actually the Mean Absolute Error (MAE)?

5) Do the models in AppSheet remove or otherwise handle outliers during training?

Thank you so much in advance! 😊


Hello,

I work on the ML team at AppSheet. I can help you with these questions.

1.a) This value is recall.

1.b) This value is precision.
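To make the two definitions concrete, here is a minimal Python sketch (an illustration only, not AppSheet's internal code). The helper names and the 83/17 sample data are assumptions chosen to mirror the class split mentioned in the question. It also computes a trivial "always predict Yes" baseline, which can be a useful reference point when judging scores on imbalanced data.

```python
def confusion_counts(actual, predicted, positive=True):
    """Count true positives, false positives, and false negatives."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical data: 83% of rows are positive, 17% are negative.
actual = [True] * 83 + [False] * 17

# Baseline that ignores the input and always predicts the majority class.
always_yes = [True] * len(actual)

tp, fp, fn = confusion_counts(actual, always_yes)
baseline_precision, baseline_recall = precision_recall(tp, fp, fn)
print(f"baseline precision={baseline_precision:.2f}, recall={baseline_recall:.2f}")
```

With this split the baseline reaches a recall of 1.0 but a precision of only 0.83, so a reported precision well above the share of the majority class suggests the model is doing more than guessing that class.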

2) No, we do not currently attempt to correct imbalances in your data.

3) Handling class imbalance is a tough problem in general. Since we don't currently expose any controls over the training parameters, the only way to affect the outcome of training right now is to change your data. You could try removing some of your over-represented rows (downsampling), but this is only viable if your dataset is large enough to still capture the underlying data variability after downsampling. You could also try duplicating some of your under-represented rows (oversampling). Note that you can train your model on one table and use it to predict on a different table; just ensure that the column names are the same in both tables.
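The two manual options above (dropping over-represented rows or duplicating under-represented ones) could be sketched like this before loading the table into AppSheet. This is a rough illustration in plain Python; the `rebalance` function, the `Churn` column name, and the sample rows are all hypothetical.

```python
import random
from collections import Counter, defaultdict


def rebalance(rows, target, mode="oversample", seed=0):
    """Return a copy of rows in which every class has the same row count.

    mode="oversample" duplicates random rows of the smaller classes;
    mode="undersample" drops random rows of the larger classes.
    """
    if not rows:
        return []
    rng = random.Random(seed)
    groups = defaultdict(list)
    for row in rows:
        groups[row[target]].append(row)

    sizes = [len(group) for group in groups.values()]
    goal = max(sizes) if mode == "oversample" else min(sizes)

    balanced = []
    for group in groups.values():
        if len(group) >= goal:
            # Keep a random subset (all rows when the size already matches).
            balanced.extend(rng.sample(group, goal))
        else:
            # Keep every row and add random duplicates up to the goal.
            balanced.extend(group)
            balanced.extend(rng.choices(group, k=goal - len(group)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical table with an 83/17 split in the target column.
rows = [{"Churn": "Yes"} for _ in range(83)] + [{"Churn": "No"} for _ in range(17)]

oversampled = rebalance(rows, "Churn", mode="oversample")
undersampled = rebalance(rows, "Churn", mode="undersample")

over_counts = Counter(row["Churn"] for row in oversampled)
under_counts = Counter(row["Churn"] for row in undersampled)
print(over_counts, under_counts)
```

The rebalanced rows would go into the training table, while predictions can still run against the original table as long as the column names match.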

4) Correct, this value is MAE.
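For reference, MAE is the average of the absolute differences between the actual and predicted values. A minimal sketch with made-up numbers (not the data behind the 776.67 figure):

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all rows."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical values: absolute errors are 200, 500, 100, and 0.
actual = [1000, 2500, 400, 3000]
predicted = [1200, 2000, 500, 3000]

mae = mean_absolute_error(actual, predicted)
print(mae)  # 200.0
```

MAE is expressed in the same units as the target column, so it is easiest to judge relative to the typical size of the values being predicted.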

5) Outliers are not removed; however, numeric values are standardized, which helps to reduce the variance introduced by outliers. You may still want to experiment with training on data where you've removed the outliers yourself and compare the performance.
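As a sketch of that experiment, the snippet below standardizes a numeric column and, separately, filters outliers with a 1.5 × IQR rule. Both are assumptions for illustration: the reply does not say which standardization formula AppSheet uses (a z-score is assumed here), and the IQR rule is just one common way to define an outlier. The sample values are made up.

```python
import statistics


def standardize(values):
    """Z-score standardization: subtract the mean, divide by the std dev."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def drop_iqr_outliers(values, k=1.5):
    """Keep only values within [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


# Hypothetical numeric column with one extreme value.
values = [10, 12, 11, 13, 12, 11, 500]

z_scores = standardize(values)
cleaned = drop_iqr_outliers(values)

print([round(z, 2) for z in z_scores])
print(cleaned)
```

Standardizing rescales the column but keeps the extreme value far from the rest, which is why it can still be worth training once on the full data and once on the filtered data and comparing the reported metrics.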

Regards,

Mike
