- AppSheet

- Tips & Resources

- Tips & Tricks

- Re: Import data from CSV

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

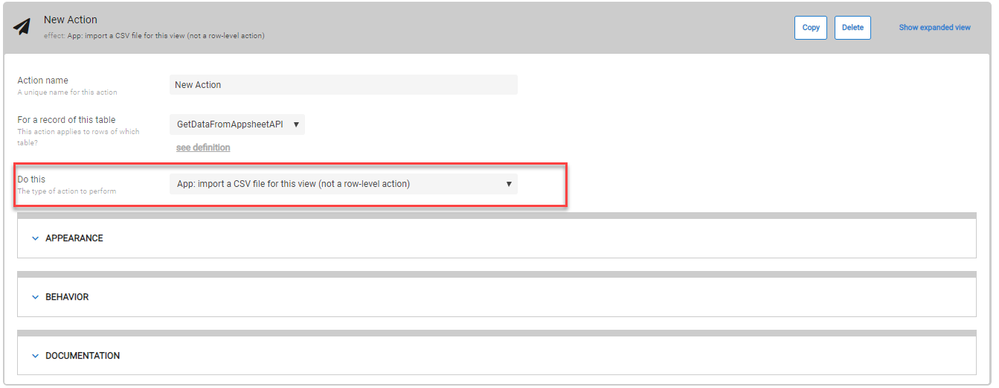

I conducted quick testing for New Action of “Import CSV file” as it seems to be functional right now.

I save the CSV file (made out of Excel) and saved to local. This CSV have first row with exactly same names of column against the target table

This is observation so far.

-

Add new row

It works. Make sure to add “unique value explicitly” on the csv file otherwise this action wont add new rows (for rows missing the key value)

Even we have initial value set and leaving the value in cells for key field in CSV as null/blank, action will not upload rows. Suggest to Appsheet dev team to review their code to let the action add new row even missing the cell key value while we have initial value set on the column definition on appsheet editor.

-

Update existing row

If the value in key field in CSV matches the one in existing data key value, then the uploaded rows from CSV will update the existing row. Lovely

- Key value missing

See comment in 1). If we miss the value for key, either existing or new, this new action will not add new rows.

- Data type mis-match

For instance, we have number type on Appsheet editor, but we have text value for that field in CSV, upload csv seems to be failing. Meaning nothing is going to happen is data type mismatch is detected.

- CSV file type sensitive?

Save in the format of CSV UTF-8, and upload to Appsheet, it works. Other CSV file MS DOC does not work.

Those were the results of quick test withe super simple use cases . Hope other community member will add something new findings.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure, the number of rows (like 400) cause the problem, I suspect the operation could be forced to be timeout on the backend, but let s wait for clarification from our professionals. (@Phil)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We literally developed this functionality via Gcripts 3 weeks ago for a client… never noticed it before. ![]()

Well - at least we’ve got native support now ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is a status update on the CSV Import feature.

I released two changes this week:

- CSV Import is now rolled out to all customers.

- The Open File dialog that appears when you click the CSV Import button was displaying many file types. It now only displays .CSV files.

I hoped to release the following changes this week, but the support load is intense and I have not had time to complete testing. I hope to complete testing this weekend and deploy the following changes early next week.

- You will be able to omit the value for a key field having an initial value. The system will assign an InitialValue to the newly added record.

- Some invalid input data values were not being detected. For example, if you entered a text value in a numeric field, we did not report an error. Such errors will now be detected.

- In found some cases where InitialValues were not being applied properly. They will now be applied.

I also hope to significantly improve performance when adding records to a SQL database. CSV Import first checks each CSV record already exists in the target table. If not, we add the CSV record to the target table. If so, we update the CSV record in the target table. I plan to improve the performance of the SQL queries we do when checking whether the CSV records already exist.

I have a theory that may explain the “Action Failed” error when you import a large number of records. I have not yet confirmed this theory. When you add a large number of rows, CSV Import is spending a lot of time checking whether each row exists. This can cause the client to time out before all of the CSV records are imported by the server. The client then displays “Action Failed”. Despite this, the server eventually completes the CSV Import, and the records are added or updated to the target table.

If this theory is correct, the performance optimization I hope to make when adding records to a SQL table may improve the situation.

I recommend waiting until these changes are released before making extensive use of CSV Import.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Phil,

Thank you for working tirelessly to bring improvement to this new features. I wish to implement action to upload csv to my production app, but I refrain from doing it until I get full confidence that it works perfectly.

I tested further, those are observations which may help you.

For error message “Action failed” seems to be posted when we have high number of rows, although it dont have exact number of rows which start to pose this error message. Just few rows in CSV wont post the error, but hundreds and thousands of rows, yes it will.

However, it is strange the rows are ADDED even we see this “action error” message, as @Maruti_Cei commented.

When I add rows with the same key id, i.e. test to the set of rows which are already in Google sheet and different values in other column (none-key), then I see this error message, but data is actually NOT updated.

Another test.

I placed date type column and on CSV file I placed the text/strings on this field for one row, and rest of row it store the date type values.

Action to upload CSV runs fine. No error message was posted. Checking the data source, and the date values are added as they are, while the text value (on date type field) was found “null” in the cell. So obviously the value in CSV file which type is conflict with Appsheet data type seems to be ignored, which is reasonable.

But THe app user have no way to notice this.

When the import CSV action fail, i.e. not do the job, either add or update the rows, then error message should pop up with error message to indicate .

In the same way, then the row values are ignored to either add or update to due to mismatch/conflict with the data type, I think Appsheet should pose error to App user to tell them “hey, you uploaded the data, but some of the data may lost during the process due to data type mismatch”. some thing like that.

This is not a error message, but once the action/job completed, then App should post alert message to notice to the user “hey, upload is now completed”.

Now this import action is kind of silent job…

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I will be rolling out two Import CSV changes over the course of the next two weeks.

The first changes significantly improves performance when adding records to a table which uses a SQL database. This performance improvement applies when adding any number of records using either the IMPORT CSV command or the REST API. It is especially helpful when adding a medium to large number of records to a SQL table. If you wish to experiment with this change, please provide your AppSheet account id and I will add you to the rollout. I recommend that you use a test account and an experimental SQL table while doing such experiments to avoid any risk to your production application. I have tested this improvement extensively, but this is a fundamental change and it involves some risk. Alternatively, you can wait until this change is gradually rolled out over the course of the next two weeks.

The second change improves field value validation when adding or updating records using either the IMPORT CSV command or the REST API. If you wish to experiment with this change, please provide your AppSheet account id and I will add you to the rollout. Again, I recommend using a test account if you choose to do this. Alternatively, you can wait until this change is gradually rolled out over the course of the next two weeks.

If testing goes well, I will be releasing a third change tomorrow afternoon that improves the dialog displayed by the IMPORT CSV command. It will now display an error message, if an error is detected by the IMPORT CSV command. It will display the number of records loaded if the IMPORT CSV command is successful.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you please add me koichi.tsuji@vendolasolutions.com for testing those new release?

Throwing error is surely helpful.

Adding number of rows is quite heavy operation I would say. In case, the user got error message, for instance, some of the rows are not really added due to some error, it should be too late for user to cease the import operation and the rows could have been already added. Then there is no UN-DO operation/option for now. When I see some error, I probably work again on CSV file and push correction based on error message, and re-import the file. But this will duplicated the nubmer of the row.

To avoid this, I suggest that we add process before the app firmly add/update the rows, meaning the app/update to the data source will only happen upon confirmation by app use explicitely.

Steps something like this.

-

Hit action button.

-

File picker to open

-

The user selcect file and hit go.

-

Data on CSV fies goes to somewhere the tentative storage, rather than being pushed to the data source at this point of time.

-

Upon saving data “tentatively” to intermediate place, then dialogue opens, which displays ; \

a) IF there is no error, then “No Error”. In case there are error, the description of the error.

b) Sample set of the rows (like a preview) -

Then dialog holds buttons

a) Cancel operation (this will not add/update the data to data source, the data in intermediate storage will be cleared.)

b) Proceeds, .i.e. to save/update the data regardless of with/without error.

For instance, I add hundreds or even thousands of rows by Import CSV, I wish to get the confirmation message, before proceeding further, step by step.

Hope this makes sense across all the users who may use this new features.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The Import CSV command first validates all of the rows in the CSV file. If any errors are present, it reports the first error it finds and stops. It only does the Import if no errors are present. Admittedly, if many errors are present, this may be burdensome because you only see the errors one by one.

My primary goal at this stage is to resolve the speed problem.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It makes sense to get the feature up and running in a solid format, then later accentuating it. It would benefit from some for of column picker/pairing situation. Then following that a review stage… but that’s all not immediately necessary. Appreciate your work on this!

We are currently leveraging the API, if you add my account to the roll out, could it potentially geek up some of that? Or would we be good to test this on our account, just in non production?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Phil,

Yes, thing should happen one after another, step by step.

Once the error is detected the operation ceases, which should work as safe guard to protect the wrong data (unexpexctedly) uploaded to the data source and force the user to work on app to delete data one row and then another… which will be hours and days jobs, but it can be avoided by validation process like that.

In the meantime, SQL and speed stuffs you mention right here, I m not perfectly sure what it is.

On this thread, we are addressing “import CSV”, and you are introducing new feasture to improve the speed to dump large amount of data to SQL. For instance, add and update thousands or rows via CSV import, and then will improve the performance at the end, likely massively in case app is hosted over the cloud sql.

This is also valid and applied when we add / update large amount of rows through APIs as well?

I suppose you answer should be yes.

Im wondering if there is also improvement of the performance of Appsheet “Action” to add new rows, update bulk rows which is similar operation like API, CSV import.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Grant,

I am being cautious in recommending that you test using a test account and test SQL data. If I add your account to the rollout, it affect adds and updates done via Import CSV, ETL, or the REST API if your storage provider is SQL.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Koichi-san,

The original customer problem reported that Import CSV into an AppSheet table that used SQL was taking too long. They reported that if they loaded a few hundred rows, the Import CSV dialog reported success but the import operation took too long. They reported that if they loaded a few thousand rows, the Import CSV dialog reported failure but eventually the rows appeared to load.

When I investigate this problem, I learned that the performance problem was not specific to the Import CSV command. Performance was poor whenever we added lots of rows to any SQL based table. This was true whether the rows were being added as a result of a Import CSV command, an ETL operation, or by someone invoking the REST API to add many rows.

When we are adding rows, we first check to see if each record already exists. If it does not exist, we insert the record. If it does exist, we update the record. I was able to significantly improve the performance of both checking whether each record exists and adding the record. We now check whether the added records exist by doing a SELECT WHERE query that specifies up to 200 keys at a time. If you have more than 200 added records, we do this in batches of 200 keys each. As a result, the performance depends on how many records you are adding and how many records are present in the existing table. It is IMPORTANT that you have a SQL index on the key fields to make the SQL SELECT WHERE query as efficient as possible for SQL to execute.

As a result of these performance improvements, I was able to significantly improve the performance of Import CSV, ETL, and the REST API when adding records to a SQL table. When I loaded 500 rows into an empty SQL table it reduced the load time from 6 minutes 45 seconds to under 4 seconds. I was able to load 3000 rows into an empty SQL table in 16 seconds. If you have existing rows in your SQL table the load times will be higher. The more existing rows in your existing SQL table, the slower because they need to be checked when determining if the added rows already exist. That is why you need to have a SQL index on the key fields to make the SQL SELECT WHERE queries as efficient as possible.

While I was testing my change, I noticed that the Import CSV dialog box had two problems. It was not displaying an error message if an input validation or other error occurred. It was not displaying a success message if Import CSV succeeded. I updated the Import CSV dialog to display an error if any was detected. I updated the dialog to display a success message and to report how many rows were loaded if the Import CSV succeeded.

As a result of your Import CSV testing, I investigated and fixed a few other problems affecting Import CSV. These included automatically assigning key values to new added rows when the key value was omitted provided an InitialValue was defined for the key field. I also fixed other data validation problems which you and I found.

To fix these problems I had to make fundamental changes. I tried to test these changes carefully, but there is risk with every change. These changes are no exception. We cope with that, in part, by rolling changes out gradually.

In this case, I am offering to let those of you who want to try these changes early access to them. I can enable these changes on an account by account basis. If you wish to be added to the rollout, your entire account and all of the application within it will see the effects of these change. That is why I suggest that if you wish to try the change you use a test account, a test app, and test SQL data. I can then enable these change for your test account. Once you have confirmed that the changes are working for you test application, I can add you production account to the rollout.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When we import, what is it looking for, simply a column name?, what if they are out of order, gaps, etc.?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Phil - san,

Thank you very much for your detailed explanations, I m now fully cathin up wiht you I hope. I will create new appsheet account for testing purpose, and will get back to you separatetly to conduct testing. Yes, all my production app is running on MS SQL as data source, better to avoid to conduct testing on the same account where we may see unexpected behaviro which may interfere the use of prouction apps.

The general performance improvement is surely good news for users like me, hosting data on SQL instead of Sheet.

For now, I came up with two questions I hope I can get your advice over.

- SQL index

It is IMPORTANT that you have a SQL index on the key fields to make the SQL SELECT WHERE query as efficient as possible for SQL to execute.

To improve the performance in general, I will take the suggetion to make key fields as index. I just wondered if I should make the other fields which could be employed afte WHERE clause on SQL select statement. For instance, I do have date fieds, and on appsheet expression i filter out the older data whose date filds is older than X date. In that case, I assumed it will improve the query performance (both on SQL and Appsheet engine) by making “date” fields as idex as well as key?

Or doing so will not affect any performance at the end?

- Possible workaround to make the performance far better.

I understand the technical mechanism in terms of how the rows are added and updated by API , CSV import. So all in all, the update/add operation against the table with “smaller amount of exisitng rows” should perform better rather than table with tons of existing table.

To improveperformance better, I thought there is possible workarond like this

a. First, on the SQL server, create a VIEW out of table we wish to add new rows by API/CSV file.

b. This view is made out of table with filter condition, where the matched rows is marginally zero. For instance, filtering by date filter, with condition, date <~ 2100/1/1 something like that, and returning zero row as we dont have existing rows mathing such condition.

c. Read proper table as well as this VIEW to Appsheet.

d. Create action for CSV file import, but this action is associated to VIEW instead of Table.

Whenever the import csv file is fired, then the row will added to VIEW instead of table, where the existing rows are basically ZERO all the time, and which will make the performance far better? The uploaded rows to this VIEW will be ruled out after import opration as it does not matching the condiitions.

If this works, then in case of “adding new rows” ops will perform better? although it wil cause the problem when we UPDATE the rows though.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Match on column name. Column order not important. Omitted columns allowed, provided required fields without InitialValues are specified.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I’ve got an issue where I have an app formula set to NOW() - I wanted this to trigger only if there was an actual update… it appears this is getting updated even if nothing about the record changed. So it appears that some form of updates are triggering even if nothing had changed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why not use a ChangeTimestamp instead?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I should actually be using that… (But, an app formula shouldn’t trigger unless the record is updated… right?)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It’s still a bug that needs to get fixed, I just noticed you had a more explicit and exact need of the ChangeTimestamp instead.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Correct.

Actually, let’s think about an edge case… Suppose updates come in for a row, so the saved row is loaded, the updates are applied, and the app formulas are evaluated. Suppose the “updates” just happened to be the same values already present in the row, so following the “update”, none of those column values changed. But the columns with app formulas may have… Thus, the row has changed and the updated row is saved.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When I typed my previous response, I was thinking of exactly this, but couldn’t remember how to describe it at the time.

Also, I doubt any performance improvements could possibly come from @Phil having it check if any of the values for that record are actually changing, and dropping the update if all the data matches. But I don’t know for sure.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

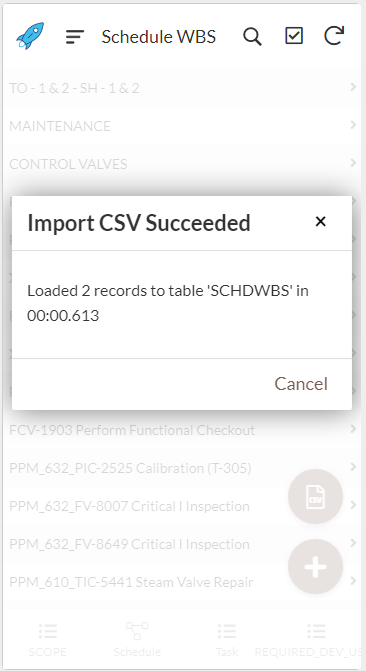

A little testing this morning. (Note, I’m using Oracle DB) When I loaded 2 records, the app instantly went to “Starting Up” screen, and then provided this. One thing is that the dialogue box only has option for cancel, which is misleading.

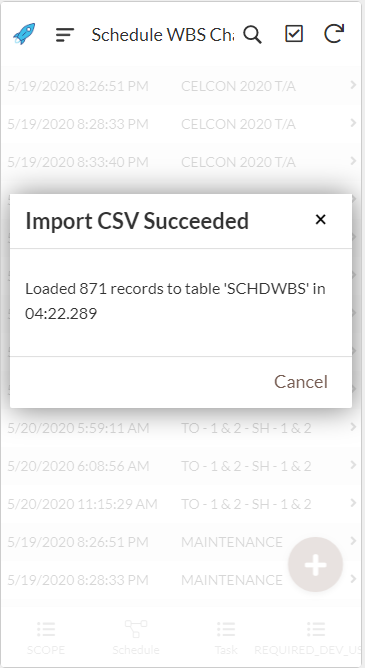

When loading in a file with 871 records, there is no catch, or feedback that anything happened. (I have the biggest urge to keep uploading the file over and over again) After a few minutes when I decide to sync, the records are indeed there. Then, all of a sudden after perusing the app for a few minutes, this dialogue pops up:

In general I would rather have some control of this dialogue box, or at least be able to define a “Display Table Name” I’ve ran into this in other places, a table display name would tidy that up a bit. (But of course control over this message/variables at the action level would be cool)

One more thing, it seems the CSV Import Action is pushing a different time than it should be. The top record was manually edited, and the bottom record was updated by the import moments before.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe I need to play with it a bit more… maybe some finesse this time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Phil @Steve @Bahbus I went ahead and created a super long video (10min) showing what I’ve got going on. Would love to get this even semi-functional, especially from the change table perspective. I stripped out all my expressions, and set the changetimestamp to watch the main columns. It is still catching a change when rows are “Overwritten” Let me know what you guys think the best way to setup a change table is.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hmm, I had assumed ChangeTimestamp would internally run some sort of quick BEFORE<>AFTER in order to actually trigger.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Same, but apparently it’s just snagging “did it get updated” unless I can figure out that "Changed values section, but I don’t see that anywhere in the docs…

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Grant,

I have been tied up on other customer issues and have not gotten back to Import CSV.

I have added your account to the Import CSV rollout.

If testing goes will, you will be included in the rollout early next week.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there a demo app we can try for this please?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don’t think any demos exist for it yet.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Phil

Could my account please be added for Import CSV when you get back to it, 129805, thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Phil Does the import CSV care at all about security filters?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello! I was working with CSV functionality and I realize that if I use the NOW() function i got different timezone (+5 forward).

I set correctly the locate options in table and in Gsheet options.

Maybe this is an affection regarding the implementation of UTCNOW()? Because is the same datetime.

Thanks in advance.

Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello! If possible somebody could try to replicate this issue? I have an UploadDate column with initial value NOW(), after upload the CSV i realized the date is +5 hours forward and its the same as UTCNOW(). My locale config think is OK, since is the only table that i got this different date.

Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Lynn,

Just saw you request to be added to the Import CSV rollout.

Sorry I did not see it earlier.

If testing goes well, you will be added on Monday afternoon Seatle time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great, thanks @Phil

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you @Jonathon this would be a workaround.

Perhaps I’m wrong, but I think using a CSV directly as a Data Source would speed up the sync time.

Especially when you use it for read_only like we do.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Phil

Could you please added our account, 253034, for Import CSV? Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I checked in three changes to Import CSV today. If testing goes well, those Import CSV changes should be deployed on Friday June 12, 2020 in the afternoon Seattle time.

- There were problems when using a composite key if those key columns were not at the beginning of the record. The key columns can now be anywhere in the record.

- As Grant and others pointed out, I was not displaying a dialog box until the Import CSV request completed. I now display a dialog box as soon as the Import CSV command is invoked. The dialog box simple says Starting Import CSV for Table:

<tablename>. Some people have asked for a dialog box with a progress bar. That is really hard when a client is invoking a web service. The client and server are using a simple request/response mechanism. There is no easy way to exchange progress information between the client and the server. - I have enhanced the dialog box that is displayed when the Import CSV command finishes that displays the number of records inserted and the number of records updated.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good stuff!

Thank you and I will carry out some testing and will get back here with the feedbacks.

Have a great weekend.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Koichi-san.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Jayaram,

I have added you to the Import CSV rollout.

If testing goes well the change should be deployed on Monday afternoon Seattle time.

-

Account

6 -

App Management

22 -

Automation

187 -

Data

141 -

Errors

19 -

Expressions

206 -

Integrations

104 -

Intelligence

18 -

Other

57 -

Resources

24 -

Security

14 -

Templates

56 -

Users

20 -

UX

222

Twitter

Twitter