- AppSheet

- AppSheet Forum

- AppSheet Q&A

- API Workflow: Add Rows to Another Table

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

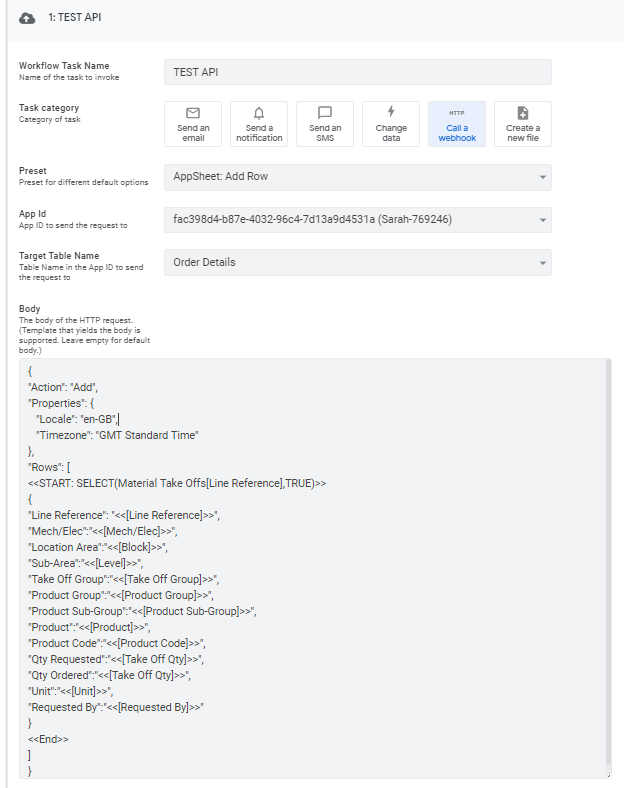

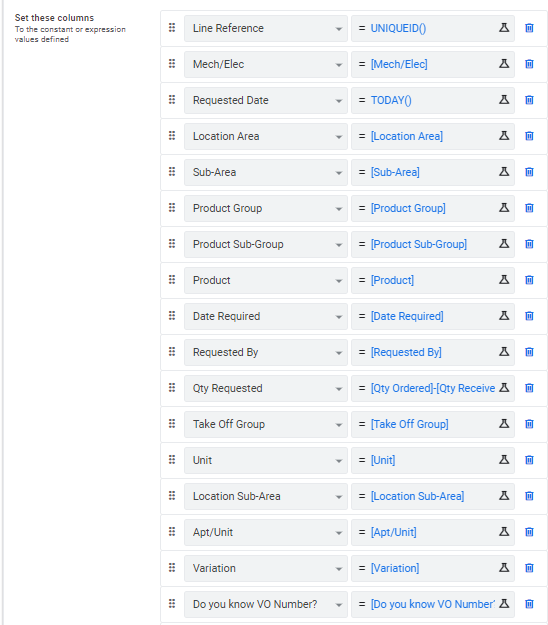

I’m currently testing out a batch add row workflow using a webhook.

I had previously been adding a selection of rows using the add new row to another table action. I have found this was taking considerable sync time and disturbing other app functionality when applied.

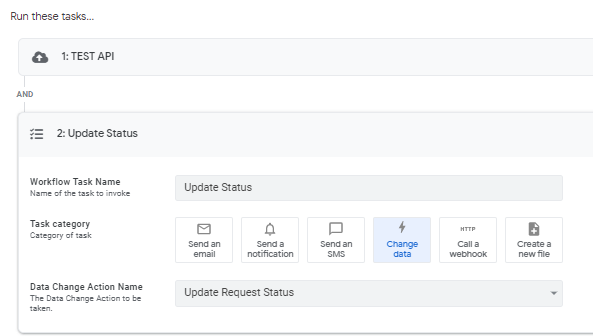

I have been trying to use the workflow with the following process;

- The rows are selected and an action to update the date/time of the rows updated

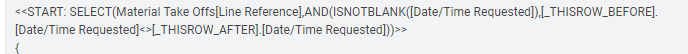

- The condition the workflow is checking is whether or not the date/time value has been changed from previous version using this formula

AND(ISNOTBLANK([Date/Time Requested]),[_THISROW_BEFORE].[Date/Time Requested]<>[_THISROW_AFTER].[Date/Time Requested])

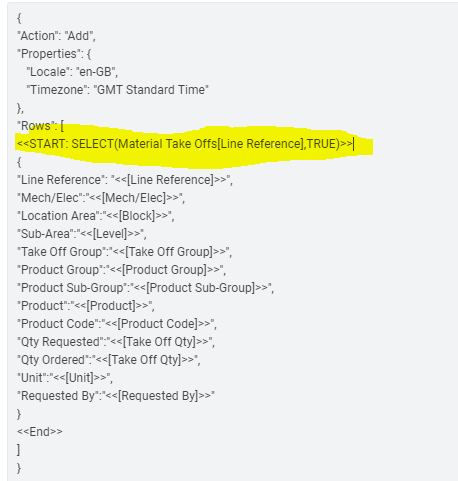

- This then triggers the workflow to add row using the following

- After the workflow adds the row the rows should update the status from ‘To be Requested’ to ‘Requested’

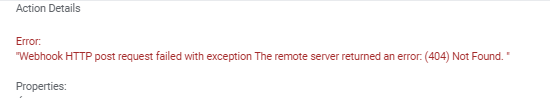

This is taking even longer than the previous action and I’m receiving this error in the log.

"Webhook HTTP post request failed with exception The remote server returned an error: (400) Bad Request. "

I can’t seem to find an appropriate action to trigger the workflow. Is there someway to know or record when the action is fired?

- Labels:

-

Automation

-

Errors

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

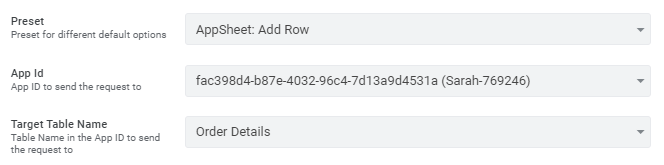

I’ve never had any luck with those ‘Preset’ AppSheet:__ settings. It was too buggy when it was first released so I just use ‘Custom’ and insert the endpoint URL manually.

I decided to give it another try this morning and created an AppSheet: Add Row webhook, then ran a few tests.

- Execution times were excessive (~60 seconds)

- 400 Error on one attempt ( “Errors”: “Error: AppSheet Webhook action depth reached”)

I would suggest trying the ‘custom’ webhook, or maybe see if the new AppSheet Automations would work.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

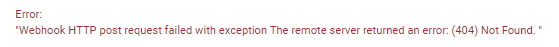

Thanks for the help, I’m still receiving the same bad request error in the log with the custom webhook

"Webhook HTTP post request failed with exception The remote server returned an error: (404) Not Found. "

Is it something to do with the URL? I wasn’t sure I had done that properly is there a particular URL I should be inputting

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

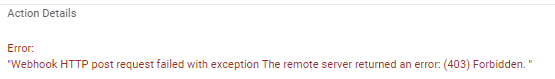

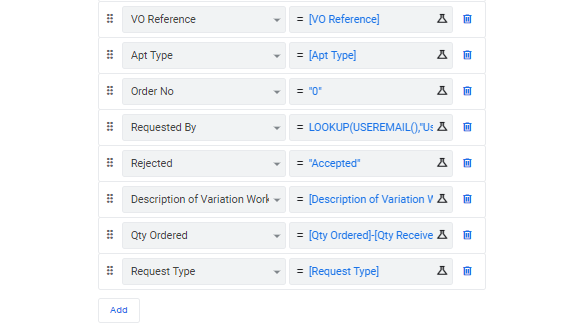

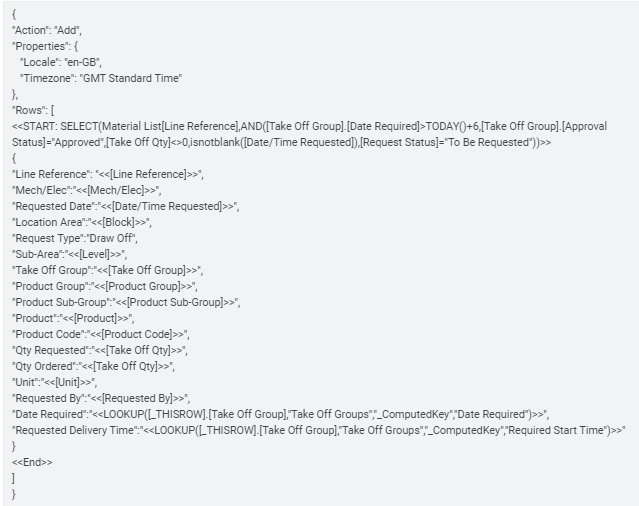

This is the JSON text

I think the SELECT formula thats causing the issue, I don’t want the entire table to be checked just the selected items that the action is applied to so its timing out

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is that the default template that was generated by AppSheet? Maybe just change the TRUE to filter out for your ‘selected items’.

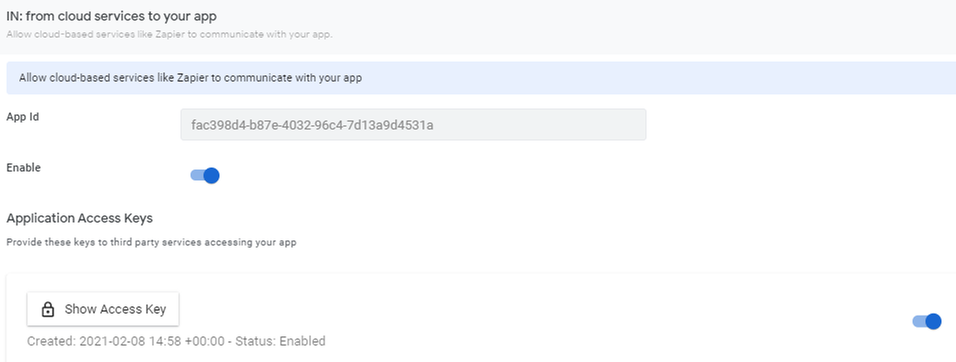

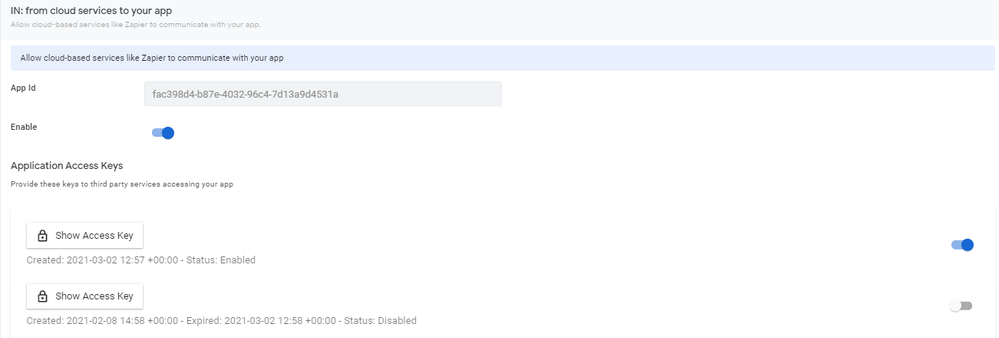

The 400 error is a separate problem, though. Is the API enabled, under Manage Integrations?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes the API is enabled.

Okay so remove the TRUE or replace it with the condition formula?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you read the whole table like you have, and the table is big one, it could cause this as well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tried switching the TRUE statement with the condition

But I’m still seeing this in the log

any idea where I’m going wrong?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If the formula generates a blank list, it will produce an error… though if I remember this correctly, it gives “Bad request” rather than 404.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Aleksi I have a question.

Recently I’ve been seeing an increasing amount of people making use of the API for operations that could be achieved using actions; so it’s got me wondering?

What is the main differences between using a webhook to add these rows vs. an action?

To me, it seems that the webhook operation from this post (and all the others I’m referring to) could easily be switched to a reference action that creates the rows - and perhaps reduce some of the “coding” necessary.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Using the action to create rows in another table is taking significant sync time e.g. 18.5 minutes to do 46 syncs, some syncing is taking over an hour due to the large number of items they have selected to add.

We have noticed a pattern between users performing this action making the whole app and in some cases other apps under the same datasource, unfunctional. We receive an error “Too many requests being sent”.

Thats why we have been looking into API now

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As Sarah explained, if you have more than just few records to add/update, it doesn’t matter. But if you have lot of, it’s better to bulk calculate them for example with the Start: & End expression.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A very good reason. (^_^)

Perhaps there is a way to adapt your data schema to reduce the number of records necessary?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

At the minute the Table I’m taking the rows from (Material Take Off Table) has 15,327 rows

The Table I’m adding them to (Order Details Table), contains 16,789 rows.

Does that seem like a lot for this action to run smoothly?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yeah… that’s a fair amount of records to deal with.

- Do you use slices to create subsets of data?

For example:

First

I’m always advocating against special characters in column names, as_well_as_removing_spaces_for_underscores.

Just throwing it out there, that forward slash inside the column name could cause some problems as things get more and more involved. If you ever port this over to a SQL, it will complain about that in a big way; just FYI.

In this formula, you’ve got the condition isnotblank([Date/Time Requested])

I would split that off into a slice

- this way when you do a SELECT(), instead of doing it over the whole table, you can use your subset and “lighten the load.”

This is probly the most forgotten, or unknown, thing to do with AppSheet apps. The source of almost everyone’s problems is due to some sort of SELECT() they’ve got running somewhere - and it’s taking FOR-EV-ER. By creating data subsets you offset when calculations are done, greatly increasing the efficiency of everything invovled.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I created a slice with the condition isnotblank([Date/Time Requested])

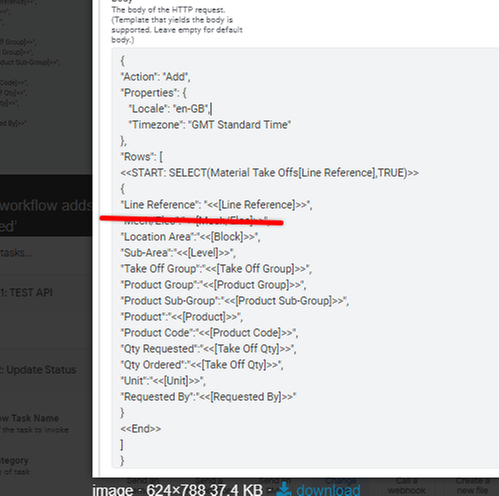

I tried to add 4 records, which was 4 syncs that ran for about 1 minute. But nothing added to the Order Details table due to this error from the editor

There were only 23 rows that met the slice condition, so not sure where I’m going wrong.

I think the workflow itself just isn’t adding to the correct table ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- 200 Success

- 400 Bad Request

a. The Application Access Key is missing.

b. The AppId is missing.

c. The Post body contains invalid data. - 403 Forbidden

a. The Web API is not enabled for this application.

b. The Application Access Key is invalid.

4. 404 Not Found

a. The application specified by the AppId cannot be found. - 500 Internal Server Error

a. An unexpected error occurred.

In view of the error code, it sounds the issue is associated with accesskey or something.

Is your integration setting and turned on and the fresh access key available?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes I think so

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Create new access key and try a shot with it

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there something additional I’m missing here, I have the API enabled, the access key is enabled.

This is the URL in the workflow

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

All the configulation you made looks fine to me.

I wont want to recommend you as it will add more of works to you, but in the past I did when I face similar issue.

Just copy json body.

And delete the workflow and create from scratch.

I remember it got through at that time, it is funny story though.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This seems to be the fix for more than just workflows sometimes.

- Views, actions, workflows… anything that has a “digital element” inside the AppSheet editor - the little container thing we can expand/collapse.

- It’s like, sometimes these get corrupted and need to be deleted in order to fix.

(This is rare though)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, still no luck with it unfortunately ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try this:

Remove the [Mech/Elec] column from the body - see if that forward slash is causing the problem.

Ultimately it looks like everything else is fine… which leaves either:

- something’s wrong with the body payload; or

- it’s a bug.

You may also need to remove the dashes as well from [Sub-Area]

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @MultiTech_Visions ,

Your recommendations/suggestions are always very insightful. May I ask the following?

So is this understanding correct that if there is a SELECT() to be run on large data set with conditions such as isnotblank([Date/Time Requested]), better to first create a slice and run any further SELECT() required on that slice?

Also if an app requires two SELECT() s to run on a table, first select with condition A, B , C and the second one with A, D, F, then is it better to create a slice with condition A and then run two SELECT() s on remaining nondependent conditions?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Absolutely! But not just for large data-sets - this is how everyone should be building their apps.

When making the original AppSheet videos we missed this

When we were making the original AppSheet videos 5 years ago, we completely missed covering this topic.

To be fair to myself, I had no idea this was a thing that we should do. It wasn’t until I started working with clients to create complex database style apps, that I realized how much of a performance hit manually constructed SELECT() formulas have. Praveen had mentioned something like this over and over, but it never really made sense what he was talking about - until I was forced to deal with poorly performing apps.

In your traditional database data schema, you’ll create “views” - which are essentially Slices in the SQL world - that hold these subsets of data. They become extremely helpful when you’re implementing complex formulas that need to run over large amounts of your data.

Learn more here:

https://www.essentialsql.com/what-is-a-relational-database-view/

The ability to create efficient data subsets is a skill that’s missing from most AppSheet builders’ toolbox.

The point is to take the universal conditions from your select, and hold that sub-set in a slice.

- This migrates when those calculations are done.

- With a

SELECT()the criteria is being evaluated "once for every row for each record being evaluated";- Because your

SELECT()is running all that criteria for each record it’s considering.

- Because your

- When migrated to a Slice, the criteria is being evaluated “once for every row during updates”

- With a

This becomes especially helpful when combined with REF_ROWS()

- Instead of some

SELECT()running on each record, you create a subset (slice) and ref_rows to that.

![]()

![]() &

& ![]()

![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much @MultiTech_Visions for a detailed reply and the informative video that made the concept crystal clear.

So if I may sum up, the trick is to use the complex expressions to filter rows in the slice and then use REF_ROWS on those slices. Excellent tip.

I was incidentally testing some other expressions on a large dataset couple of days ago. I will test with the slice_ REF_ROWS tip shared by you and revert with any observations or queries I may have.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much @MultiTech_Visions. @Sarah_Keown, hopefully this will solve some of our issues.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

(A little more info would help clarify this one…) but in this instance I would actually create two slices:

Slice A

- Conditions A, B, C

Slice B

- Conditions A, D, F

Then run any of your SELECT() or REF_ROWS() over those instead of the main table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think the tip shared by you will be applicable in this case as well. Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@MultiTech_Visions, slices are evaluated on the client side so adding slices will add to sync times. In your experience, is this increase in sync time still significantly less than using Select expression?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The net result is a reduction in sync times when migrating from a bunch of independent SELECT()s vs. slices.

So if you were to put things on a scale 1 - 10 (10 being bad)

- SELECT()s

- I would put at least above a 5; 6.5 is a good number (but they can quickly rise into the double digits. haha)

- Slices (which largely depends on the formula you’re using, I’m talking about simple equalities or IN()s, with some AND() and OR() mixed in - no SELECT()s)

- I would put at a 3

Again, it largely depends on the formulas you’re using.

- What you really have to take into consideration is how many records is the

select()running on?- This plays a huge role in how long it takes to run as well.

Welcome to the never-ending balancing act

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all, just an update on this issue as I haven’t responded in some time.

I eventually got the API workflow to work correctly, adding a bulk number of rows from one table to another and updating the status.

The reason behind this upgrade was due to the poor sync speed/performance when applying the ‘Add rows to another table’ action.

We had approx. 50 syncs taking 30 minutes when the action was applied to the process. Roughly 30-35 seconds per sync I believe.

Now, with API its sadly not improved as we hoped with the app taking roughly 45 seconds per sync. I am trying to work on this still but an issue arose today and I was applying the same action.

Using the Add rows to another table action, I was doing a very straight forward copy of 5 rows.

These 5 individual syncs took 1 full minute to process, which doesnt seem like much and is certainly an improvement compared to the previous action but the app will be processing much more than 5 rows, there was a recent example of up to 60 rows being copied.

By that standard it would take about 15 minutes of sycing.

I think the action works very well and is an extremely useful tool for our app but I can’t understand why it is taking so long, espeically when applied in the most basic circumstance.

Any further explanation or understanding of this would be appreciated

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How long does just adding one row take? I’ve never had my add rows take more than a couple seconds each let alone 30+ seconds. Do you have lots of columns or complex initial value formulas? From the API it only seems like 13 or so columns which should take next to no time at all.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The Order details table has 61 columns.

The action itself seems straightforward to me as I’m just taking a copy of the data, there is no app formula applied, no complicated lookup or select functions included. See below screenshot of the action. The most elaborate part it the qty requested/qty ordered column values (it contains a subtraction formula).

The screenshot is split so you can see the data closer.

For one record to be duplicated it is taking about 8 seconds according to the audit log?

The API on the other hand, is slightly more complex see below action.

Even with the complexities it still seems like a great deal of syncing? Especially if your saying you haven’t seen times like we have reported.

-

Account

1,675 -

App Management

3,090 -

AppSheet

1 -

Automation

10,313 -

Bug

979 -

Data

9,670 -

Errors

5,726 -

Expressions

11,771 -

General Miscellaneous

1 -

Google Cloud Deploy

1 -

image and text

1 -

Integrations

1,605 -

Intelligence

578 -

Introductions

85 -

Other

2,898 -

Photos

1 -

Resources

536 -

Security

827 -

Templates

1,305 -

Users

1,557 -

UX

9,109

- « Previous

- Next »

| User | Count |

|---|---|

| 46 | |

| 29 | |

| 24 | |

| 22 | |

| 13 |

Twitter

Twitter