Data type conversion taking a couple of seconds

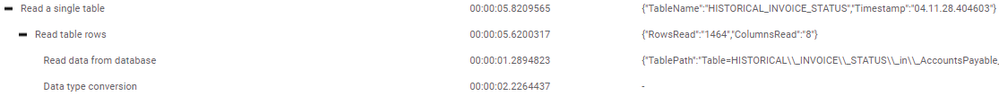

Does anyone know how I can avoid the data type conversion? It takes just over 2 seconds on fewer than 1,500 rows, but the table has 53k rows before the security filter. I have 3 text columns, 2 ints, a decimal and a datetime, so is the datetime the only thing it could even be converting? This is from a MySQL database, so…
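
A quick back-of-the-envelope check of those numbers, assuming the conversion time grows linearly with row count (an assumption; the performance profile does not confirm it). The helper names are hypothetical and only illustrate the arithmetic:

```python
# Rough estimate only: ASSUMES conversion time grows linearly with row count,
# which the AppSheet performance profile does not confirm.

def per_row_ms(total_seconds: float, rows: int) -> float:
    """Average conversion cost per row, in milliseconds."""
    return total_seconds * 1000 / rows


def extrapolate_seconds(total_seconds: float, measured_rows: int, target_rows: int) -> float:
    """Scale a measured time linearly from measured_rows to target_rows."""
    return total_seconds * target_rows / measured_rows


# Figures from the post: ~2 s for ~1,500 rows after the security filter,
# 53k rows in the table before it.
print(f"{per_row_ms(2.0, 1500):.2f} ms/row")              # 1.33 ms/row
print(f"{extrapolate_seconds(2.0, 1500, 53_000):.1f} s")  # 70.7 s
```

Because the measured time was just over 2 s on fewer than 1,500 rows, both figures are lower bounds.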

It is converting the database row data to "wire format" to send the data to the client.

I tried to optimize it, but I introduced a bunch of bugs, so I gave up.

I would also like to know if there is a way to speed up or dodge this particular sync slowdown.

I copied one of my apps and its database to test this. It seems to perform the data type conversion no matter what I do. I cut the table down to a single VARCHAR column for the UniqueID and then added a single text column; it still converted. @Steve, would you happen to know more about this step in the Read Table Rows part of the performance log? If not, do you know who might?

Like @Austin_Lambeth, I have multiple large tables, and if we could somehow pre-convert the data or otherwise get around this step, it would massively improve sync times.

I know nothing of it. I'll ask internally and see if I can get a developer to respond here.

Thanks, Steve. As you can see in the screenshot above, we could improve our MySQL performance considerably if we could get this figured out.

This cannot be the solution. Can somebody explain what data type conversion is, and how we can avoid it?

Have there been any further developments or strategies for this issue? I am currently running into the same constraint.

I'm not aware of any changes.

This is a huge bottleneck for me as well. Mine can take several hundred milliseconds per row. That might not sound like a lot, but I do many bulk operations on tens of rows at once, and this adds up to the majority of my latency, especially since I've spent a lot of time and money reducing the data source latency.

Can the dev team share more about this wire format conversion? Is it an open-source component that I can tinker with? If fixing it is too difficult, could the performance profile at least show the timing of its sub-steps? I worked on performance tooling at Google before the January 2023 layoffs, and I'm happy to help here if I can.
