- AppSheet

- AppSheet Forum

- AppSheet Q&A

- Import data from CSV action capability limit

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Let me copin in to @Phil first of all.

What we are doing now is just to simply apply action to import csv file, but against relatively high number of the set of rows and columns to import.

On our testing, the number of rows are 50k ish and column numbers are 25.

When we import such files, the action can be fired as we will see the normal dialogue box when the action start to import the file, but thereafter, App becomes completely sclient where we do believe that the backgroud operation is doing the job. The result is unstable, sometime the operation seems to be finished after 10 mins or so, otherwise we fail the whole stuffs. But App is not throwing any message if or not the operation is success or failed, as App is not throwing any error or success messages at the end when we upload relatiely large set of rows like this. We only know the fact the action failed through the app editor, monitors.

Then back to the question.

Can we get the technical limination where we will fail the import opreation due to the number of the rows/columns, more precisely number of the total cells in attempt to import? or In interms of the size of the csv file?

Let me assume we could gauge the limit which is exportable to Appsheet app in terms of the file size. Then could we get the error or rejection type of the message before the app ueser to proceed, such as “the file size is too large. Reduce the number of the rows to import” something like that.

Currently, the app stay quietn when we upload the large csv file. After 10 mins or so, we get to know if it is ended up with error or sucess through the app editor, which is just lke we are rolling a dice for a luck.

- Labels:

-

Automation

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We understand import csv file is under the “upcert” type of operation. So when we do have bunch of existing rows on the target tables the import csv file operation (as well as add new rows from one table to another table action , which could be naturally equal to import csv action) is highly likely fail due to timeout.

Is there any trick to ensure we will end up with success to bulk number of row import against table with bulk number of existing rows?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

50 rows having 25 columns does not seem like a large volume of data to be adding or updating.

Where does the target table reside? Is it SQL, Google Sheet, Excel, etc?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes indeed. Not ridiculously large data set. For this app, target data table reside in google sheet.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry Phil.

I made a typo.

The row number which is failing is actually 50k (50,000) ish instead of 50.

Is it too large ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Phil san

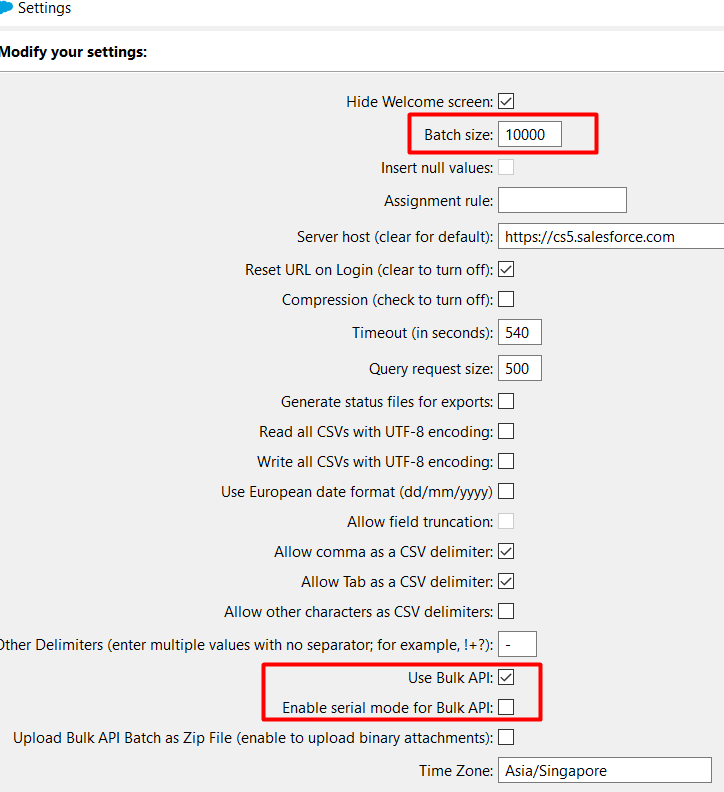

Based on the experiences we have now at the time we fail bulk numbers of data to import through the action, it appears to us AppSheet is trying to add/update (upsert) all of the record as a ONE batch, rather than splitting into separate small batches.

On the Salesforce, there is setting for CSV import funtion to batch size, along with other detailed configs and parameters.

Is that possible AppSheet to split into small chunk of batches to avoid failure of the actions?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yeah, i was meaning to ask a similar question too @tsuji_koichi, I use CSV import a lot, and the time it takes for the app to fully process and display the rows can vary from being nearly instantaneous to taking 5-10 minutes, and this is for a very reduced amount of rows and columns (5-30 rows with 8 columns).

Is that normal? I’m using google sheet as well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Having a similar issue, when testing to upload CSV files of ~2000 rows to a table the outcome seems to be unpredictable. Would be great if there is a better visibility on the upload/action result.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have updated the Import article to include a topic regarding choosing a CSV file size.

See Import a CSV File for This View | AppSheet Help Center

It explains the four minute limit and the work that is included within that limit.

As the article explains, it is very hard to predict the import time because there are so many variables that are application dependent which effect it. The best I can currently recommend is to do some experimentation.

A batch size of 50,000 rows is very likely to exceed the time limit. That is especially true if the Import triggers workflow rules.

I have added a topic regarding Import Taking Too Long in this article Troubleshooting CSV Import | AppSheet Help Center

If your CSV Import is taking too long, please see if the Performance Analyzer can help you find the problem. If you are still having problems and the batch size is reasonable, I can investigate further.

I agree that adding more sophisticated CSV Import batch controls would be useful, but we are trying to focus on improving the performance of Sync, Add, and Update. I think that optimizing those is more valuable at this point. We can always revisit CSV Import batch controls later.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Phil san

As far as “splitting bathes” kinda of new feature is added on the roadmap, I m fine for now.

Thank for updating the documentation, which would be useful.

One more question in this regard. If we have a complex expression in INITIAL value formula, does it also affect import csv function adversely? Or no impact?

For me it sounds a same story as we have complex app formula in VC.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Koichi-san,

Yes, you are correct. We evaluate the InitialValue expressions when doing CSV Import, so the time to evaluate the InitialValue expressions is included within the four minute time limit. If you have complex InitialValue expressions you need to consider that when choosing your batch size. I have updated the CSV Import article to make that clearer.

-

Account

1,675 -

App Management

3,091 -

AppSheet

1 -

Automation

10,314 -

Bug

979 -

Data

9,671 -

Errors

5,729 -

Expressions

11,772 -

General Miscellaneous

1 -

Google Cloud Deploy

1 -

image and text

1 -

Integrations

1,606 -

Intelligence

579 -

Introductions

85 -

Other

2,898 -

Photos

1 -

Resources

536 -

Security

827 -

Templates

1,305 -

Users

1,557 -

UX

9,109

- « Previous

- Next »

| User | Count |

|---|---|

| 42 | |

| 27 | |

| 25 | |

| 20 | |

| 13 |

Twitter

Twitter