- AppSheet

- AppSheet Forum

- AppSheet Q&A

- Possible tip for meaningful keys

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear community,

As I see it, keys do not and should not only serve the purpose of identifying individual records of your database for the sake of the machine, rather they should also serve the human data master in identifying and navigating through his database.

While individual data has no inherent significance for the machine; only their interrelationships matter, the same individual data is meaningful to the human and he can turn it into information.

Speaking about UNIQUEID(), I think it should not be used as the preferred option by default for key column values, rather it should only be used in the cases where no meaningful key can be crafted or to complement a meaningful key, as an additional security measure.

This is a huge topic with a lot to be said about it, so I’ll just write down two AppSheet examples, just to give an idea, but the possibilities are numerous.

1. Finding the most recent row in a table:

You’ll be better off not using for example MAX() or MAXROW() coupled with a SELECT() or equivalent to find the last row and extract a value form it, which “could impact performance” and in some cases you depend on the table’s row number which is unreliable.

Instead, when your keys begin with for example “yymmddHHMMSS” all you have to do is a simple first value extraction from a list and you have the key to the last row handy.

2. Dereferencing

-

When you have a table with rarely updated information, like a table of Services, never use random keys. Use your own meaningful keys. For example: Serv-CAR-01-tireFix, Serv-CAR02-tireReplace, Serv-CAR-11-oilChange and Serv-CAR-12-oilFilter.

-

When you have a Clients list, use one of his unique PIIs as a key, like his ID number or his email.

-

When your Client sign a service order, you can use such a key for this SO:

"yymmddHHMMSS:[serviceID]:[clientID] -

You can construct the key of a Payment for a SO like: [serviceOrderID]:[cashRegisterID]

-

and so on.

Can you see the amount of information you have just by looking at the keys? Now imagine the possibilities you have to make your expressions simpler and your app much faster.

- Labels:

-

Data

-

Expressions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Interesting points, but I’m very much in the meaningless keys camp. The trouble with have any sort of structured or meaningful key is how do you make absolutely sure it is unique and stays unique? For example if the key is yymmddHHMMSS then that’s fine until two records are created at the same time. Likewise with the table of services, not a problem until I think I need to replace tires with a different set (winter/summer) now perhaps I have to add a new service for tireSwap, do I have to add that for every car? What about if the app is used by people in different languages, do we then need to translate the unique IDs as well as the labels? Do we end up adding language to the ID?

For me the rule is very clear, I never put meaning into a key because I can never be 100% sure it will never change.

Edit to add one exception! If the key is defined by an international standard then I will use it. I don’t need to generate my own codes for countries for example!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is where your creativity; the most important aspect of a developer, should kick in ![]()

Outside AppSheet I used to add milliseconds which bring a level of practical uniqueness that I’ve never seen broken in more than 20 years of managing M2M events.

Here, in my app I added userID that cannot do more than one action at a time or cashRegisterID that could not be used by more than one person at a time.

Sorry, I don’t understand. Would you please explain?

I would never show keys to end users. Although user experience is not my domain of expertise I do believe this is bad practice. Keys are for the developer only.

If by “users” you mean people who collaborate with you in app development, then while you might have different mother tongues, you must have a common language of communication for your collaboration to be possible.

At the end, it all pours down to being creative. At the same time I would never claim that there would be a solution for anything in life that would be suitable in ALL cases. Personal preference for me has always been a valid case ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Fully agree, I should have added that, thank you for mentioning it !

I have a countries table and I do use country codes as keys.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just like to point out, these codes do change…rarely but they do.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. Finding the most recent row in a table:

You’ll be better off not using for example MAX() or MAXROW() coupled with a SELECT() or equivalent to find the last row and extract a value form it, which “ could impact performance ” and in some cases you depend on the table’s row number which is unreliable.

Instead, when your keys begin with for example “yymmddHHMMSS” all you have to do is a simple first value extraction from a list and you have the key to the last row handy.

I’m not so sure about that.

Your “first value extraction” depends on it already being sorted.

In terms of code, sorting is expensive, requiring multiple iterations through the same list. Getting a maximum value is much cheaper, requiring only a single iteration through the same list.

If you can guarantee that the list is already sorted, and that list wasn’t sorted just for this one usage, then yes, your “first value extraction” is cheaper.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I’m not so sure about that.

Your “first value extraction” depends on it already being sorted.

In terms of code, sorting is expensive, requiring multiple iterations through the same list. Getting a maximum value is much cheaper, requiring only a single iteration through the same list.

If you can guarantee that the list is already sorted, and that list wasn’t sorted just for this one usage, then yes, your “first value extraction” is cheaper.

Dear @Marc_Dillon, you are totally right!

I was actually rather talking about the impact of SELECT(). I mentioned MAX() and MAXROW() only in the context also since they would not be sufficient to use individually but they would typically be used with SELECT() or equivalent, where the problem is, because we are not interested in the maximum value per se, but we use the maximum to locate another value of interest.

Since it is a list of values that include dateTime, so they come naturally sorted. However, you gave me something to think about.

Because it is an incremental list, the value I desire is the last one, not the first, so I do:

INDEX( SORT([List], TRUE), 1)

instead of possibly:

INDEX( [List], COUNT([List) )

In other languages/environment, sorting a sorted or partially-sorted list is very cheap. But I now feel that I should use the second expression. Right? What do you think please?

Thank you! again…

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As I see it, keys do not and should not only serve the purpose of identifying individual records of your database for the sake of the machine,

I do understand why you bring up your points. However, you probably don’t want to try to re-invent this wheel. DATALESS keys are an industry standard arrived at through decades of experience and numerous headaches caused by business’ that were shut down for days looking for the source of a duplicate key and/or while the datasource was restructured due to the need to support a duplicate DATA value that was used as a key. It’s not an exaggeration. I have been personally involved in a couple of these projects.

To give you one simple REAL WORLD example (there are many). Let’s say there is a Products table and each product has a Product Code. It is tempting to use this Product Code as the row key in the Products table - its unique right?

Well, the business begins using this Product Code as an easy way to identify, and discuss, the product. It becomes a meaningful data value.

Then one day, the business gets a NEW version of a product that replaces the original one. Its different enough that it needs a whole new entry in the Products table BUT the business wants it to use the SAME PRODUCT CODE because that is how all their literature and web sites are created. It’s too expensive to change all that.

The problem? There are already Orders and other rows throughout the system that are referring to the OLD Product by this Product Code as they key. Updating the Product row in the table will point those OLD orders to the NEW version of the Product. Not good! There needs to be a way to have two versions of a Product (old and new) using the same Product Code.

The answer? With a dataless key I can enter another Product row with the same Product Code and it will not affect any of the existing system data. They will continue to refer to the OLD dataless key. Any new entries will use the NEW dataless key to point to the NEW version of the product.

Now, having said all that, most of the AppSheet apps are simple and can get by using “meaningful data values”, probably for many years without any issues. But I am here to tell you, if the app grows and you encounter a situation like I described above or decide you DO need to update how you are using Keys, it can become a major, MAJOR headache. Keys are like the foundation in a building, they are rooted deeply in the system and messing with them can cause major problems for a business. It is best to use them the right way so that you never have to touch them again. Dataless keys are that method.

Instead, when your keys begin with for example “yymmddHHMMSS”

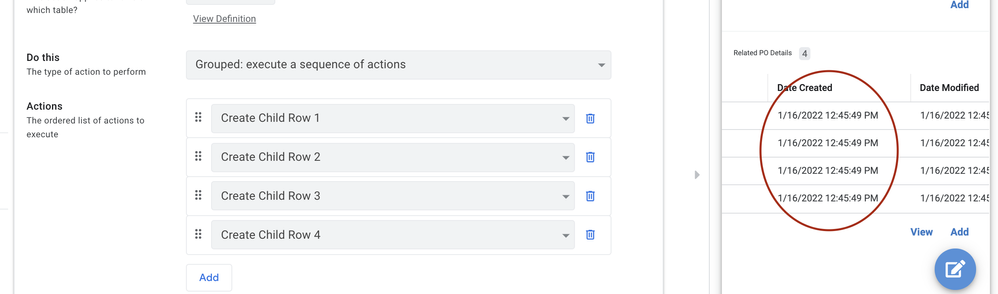

Here is a quick example of why DateTime should not be used as a key. The image below shows a view of Individual rows, created by separate actions, being assigned the SAME DateTime. The actions are in a Group attached to a Form Saved Behavior.

The point, regardless of how or why it happens, it is possible for different rows to get the same DateTime value. It is not a good key value source.

Grouped Action creating 4 child rows with a DateTime assigned to NOW() - All get same DateTime

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello John,

Thanks much for taking the time to reply in such detail ![]()

First let me tell you that I agree, in principle, to your point of view. Additionally, there are some points I’d like to clarify please.

Just like to point out, these codes do change…rarely but they do.

You are right. Country codes are not a good choice. Thank you.

The most important is: it is not about the size of data, rather than making sure record keys are and remain unique throughout the table’s life time.

you probably don’t want to try to re-invent this wheel

Actually, I believe we should, when there’s a foreseen opportunity for improvement, we should, otherwise we would have still stuck with wooden wheels instead of tires. At the end my post I’ll give a real-world example.

Well, the business begins using this Product Code as an easy way to identify, and discuss, the product. It becomes a meaningful data value .

I agree, and with the example you mentioned you’ve clearly demonstrated how this is a bad practice and how this was a bad decision.

However, as I had mentioned, you can still use a meaningful key and ensure its uniqueness by combining meaningful data with a pseudorandom code. So, instead of having a key such as 8870ba24, you can have USB-FM-128G-8870ba24.

Then, when you have a new version of the same flash memory that is faster and you want to sell it at a higher price, you can have a new key USB-FM-128G-03d91e47. That is [productCode]:UNIQUEID(), instead of just [productCode] (big mistake) or just UNIQUEID().

The point, regardless of how or why it happens, it is possible for different rows to get the same DateTime value. It is not a good key value source.

One second is a large span, of course you can a lot of records written in the same second. As far as AppSheet is concerned, I said the key would begin with the timestamp, NOT that the time stamp should constitute the key. The other components of the key would ensure its uniqueness and you can still attach a UniqueID() to the key.

Away from AppSheet, below is some account that some might find interesting:

Two cases of writing to local disk

In more than twenty years of handling M2M transactions, using milliseconds in keys have proven to be of practical uniqueness that I’ve not seen broken. Let me begin with an example.

This is a script I ran on my laptop to see how many times I can write to a file during one second.

% open tempFile w

file5

% open /etc/hosts r

file6

% set chunk [read file6]

...

%. set i 0; set j 0; set count 0

0

% while {[expr $i - $j] < 1000} {

if {$j == 0} {set j [clock milliseconds]; puts "\n\nINIT: $j"}

set i [clock milliseconds]

puts file5 $chunk

incr count

}; close file6; close file5; puts "END: $i"; puts "COUNT: $count"

INIT: 1642492698642

END: 1642492699642

COUNT: 97749

This is the resulting file:

-rw-r--r-- 1 kjseddik staff 209867103 Jan 18 08:58 tempFile

So, during one second, I was able to perform 97,7K write operation into a file on my local disk.

I ran the same test again, writing to my local disk, but instead of writing directly to the file, I wrote a simple server and a simple client on the same computer with localhost as server address. Then I ran the script and wrote from the client, through the socket, to the server, which are both the same computer. Here are the results:

INIT: 1642521861842

END: 1642521862856

COUNT: 3968

and here is the output file:

-rw-r--r-- 1 kjseddik staff 8507392 Jan 18 17:04 serverTempFile

This time, instead of 97,7K iterations, I was able to write 3,9K times only during one second; 20 times less!

Although the data didn’t leave the computer, in the second test, the write operations had to be queued at the network interface even when they were not supposed to leave the computer. Queuing is an intrinsic characteristic of network interfaces. Additionally, you need a couple of milliseconds (do a ping) just to reach your immediate local router directly connected to your PC, a single hop, not to mention the many hops your data have to cross to reach a remote database server.

Natural queuing and path delays in networks make it extremely hard to encounter two operations writing to the same server in the exact milliseconds, that’s what makes Timestamps good for database keys especially that you’ll never use them standalone for the key, but combine them with other meaningful data and, why not a pseudorandom number.

Also a bonus part, here’s an actual case where meaningful keys where immensely helpful.

IPv4 Depletion Rescue Plan

Several years ago I was recruited to manage a network transformation program for a major telecom operator. This operator entered the local market through the acquisition of five smaller local operators, each of them with its own network and systems.

The operator used to assign public IPv4 addresses to end customers, so we were rapidly faced with the problem of IPv4 depletion and needed to recover more than four million IPv4 addresses quickly (replacing them with private addresses) or face a complete business halt. The problem was compounded by another rapidly advancing project to deploy FTTH capturing new customers at a rapid rate and pushed also by planned DSL obsolescence so we needed to move all our DSL customers to Fiber also rapidly. Each new Fiber connection consumed three public IPv4 addresses, while liberation of addresses used by DSL could not be done at the same speed. You have to phase out a complete NAS to recover its subnets.

Additionally, there were numerous small projects running everywhere that were not consuming new addresses but moving them continuously from one place to another. Business requirements made it not possible to impose a network freeze for more than three days, so you always have to be acting fast.

Management brought in one of the big consultancy firms to help. Their findings that we needed to have a huge database tracking the locations of allocated addresses and changes through time and use projections to predict where we need to act first to have first gains and what subnets we can recover, where and when to impose network freezes in a way that do not impact business.

They told us we need to install our own SQL Server, that they’ll be managing for us, and they would be providing the network probes that would feed the SQL database. The problem is their planning was not the best, we needed to act faster, and the cost they asked for was crazily exorbitant! I remember the CTO calling me in his office and telling me: “you have to make do with what you have”.

We had our own in-house-produced network probes, too many of them but unfortunately non redundant. These were not designed to provide input for a database, and across the engineering teams we had little database expertise. We also couldn’t afford waiting for the consultants to develop new probes training them in the process, just for the purpose of performing a network audit; that would’ve bee reinventing the wheel. We needed to act fast and produce reliable result.

Eventually, we decided to make use of our own many-but-reliable probes and create a custom database to combine their output into meaningful information. This is what we did. We wrote intermediate scripts to feed a database that has to be as simple as possible (due to cost and also scarce db expertise), so that we know how easily extract useful information from it.

We ended having a single SQLite file to control the whole IPv4 space (public and private) across the five networks. Each running project had a table or more in that database.

Our Key format was a MAJOR factor in the simplicity of this database, and the ease with which we can write to and extract data from. We opted for meaningful keys combining:

- IPv4 address

- Current Interface MAC address (indicating current location)

- Routing protocol (BGP) information

- Date of first activation on current interface

I’m sure that the consultancy firm presented to us the best Industry Standards and Best Practices. But had we not invented the wheel we wouldn’t have been able to deliver within the time and budget constraints we had.

P.S. A couple of years later, our reinvented-wheel SQLite database, was the corner stone in IPv6 Transformation program, that I’ve been privileged to lead.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Actually, I believe we should, when there’s a foreseen opportunity for improvement, we should,

Totally agree but notice i said “THIS wheel”. This is not a case where your advice will improve things. all of your suggestions have already been tried.

So, instead of having a key such as

8870ba24, you can haveUSB-FM-128G-8870ba24.

You will forever be substringing the key to extract the meaningful part out.

The other components of the key would ensure its uniqueness and you can still attach a UniqueID() to the key.

If the “other parts” ensure uniqueness then there is absolutely no reason to add anything else to the key.

Also a bonus part, here’s an actual case where meaningful keys where immensely helpful.

Keep in mind that “other” meaningful columns CAN still contain unique values. Databases frequently have multiple unique value columns for Indexing purposes. I am not saying you can have only 1 unique value column.

The point of dataless keys is preparation for the day when one of your supposedly unique column values can no longer be unique. Think of it as a small bit of insurance against a MOUNTAIN of work. Sure, it costs little bit extra and you may never use it but if you do…it pays off ten-fold!

-

Account

1,676 -

App Management

3,098 -

AppSheet

1 -

Automation

10,319 -

Bug

982 -

Data

9,676 -

Errors

5,732 -

Expressions

11,777 -

General Miscellaneous

1 -

Google Cloud Deploy

1 -

image and text

1 -

Integrations

1,608 -

Intelligence

578 -

Introductions

85 -

Other

2,902 -

Photos

1 -

Resources

537 -

Security

827 -

Templates

1,308 -

Users

1,558 -

UX

9,109

- « Previous

- Next »

| User | Count |

|---|---|

| 39 | |

| 29 | |

| 23 | |

| 23 | |

| 13 |

Twitter

Twitter