AppSheet High Sync Latencies Incident July 9 - Jul...

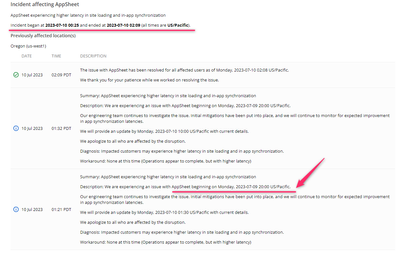

Hello everyone, AppSheet is experiencing a high-latency service interruption, which began on July 9, 2023, at approximately 8:00 PM PT. There was some initial discussion in the thread below, which was originally started for a similar problem on July 4.

https://www.googlecloudcommunity.com/gc/AppSheet-Q-A/Appsheet-is-loading-slowly-today/m-p/610578#M21...

I will post further updates to this thread.

UPDATE 11:46 PM PT - U.S. Pacific Time

Hello everyone. AppSheet continues to experience high sync and homepage load latencies. I recognize that this is a significant impact on your applications and that it is peak business hours in many affected areas. I apologize for the impact this has had on your business operations.

We have been investigating this issue for several hours and are actively deploying mitigations to our server infrastructure. These take time to take effect, and our graphs and metrics indicate that the system has not yet recovered.

Several other infrastructure engineers and I are actively working on this issue. I am working to get the GCP Cloud Status Dashboard updated to correctly reflect the service interruption.

Although the symptom appears the same as the July 4 incident (which also saw high sync latencies), our initial triage indicates that the underlying root cause is different. In that case, it was a matter of scaling up additional capacity; in this case, the capacity is there, but the server processes themselves are unhealthy. In other words, they are encountering conditions that prevent them from fulfilling client requests within reasonable latencies.

I understand and validate the frustration caused by a service outage like this. I've heard the request for a transparent post mortem, and we will work to provide that in the coming days, once we understand the root cause. This will include remediations to prevent a similar occurrence in the future.

I am actively working on the problem here with team members. I will post a follow-up in an hour, at 12:55 AM PT (US Pacific Time).

Mike Procopio

Hello everyone,

This past week, our team has spent a lot of time thinking carefully about this service outage. We have met regularly to discuss its root cause and related factors as we drafted the post mortem and action items. What follows is a summarized version of our internal post mortem to share with our user community.

Summary

From approximately 8:00 pm Pacific time on July 9 to 1:50 am on July 10, users served by the us-west1 region experienced issues syncing app and data changes between AppSheet servers and clients. This resulted in significant synchronization slowness or timeouts. Because the incident occurred at a time when AppSheet usage in the US is low, it mostly affected AppSheet users in the APAC region, for whom us-west1 is the closest AppSheet footprint.

Root cause and trigger

Rapid traffic growth in the us-west1 region, combined with a pre-existing design fault in the cache client, caused us to exceed the connection limit for the region-specific cache. As a result, sync requests in us-west1 fell back to the global cache. The unexpected additional traffic to the global cache created lock contention and resulted in unusually long sync delays.
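To make this failure mode concrete, here is a minimal Python sketch, assuming a regional cache with a fixed connection cap and a shared global cache as the fallback tier. The names `RegionalCache`, `route_sync_requests`, and `fallback_alert`, the connection limit, and the 5% alert threshold are all hypothetical illustrations, not AppSheet's actual implementation; the alert only shows the kind of detection the monitoring work listed under prevention could add.

```python
from dataclasses import dataclass


@dataclass
class RegionalCache:
    """Hypothetical regional cache that accepts at most `max_connections` open connections."""
    max_connections: int
    open_connections: int = 0

    def try_connect(self) -> bool:
        # Refuse new connections once the regional limit is reached.
        if self.open_connections >= self.max_connections:
            return False
        self.open_connections += 1
        return True


def route_sync_requests(concurrent_requests: int, regional: RegionalCache) -> dict[str, int]:
    """Count how many concurrent sync requests land on each cache tier."""
    served = {"regional": 0, "global": 0}
    for _ in range(concurrent_requests):
        if regional.try_connect():
            served["regional"] += 1
        else:
            # Fallback path: every request here competes for the shared global cache,
            # which is where the lock contention described above builds up.
            served["global"] += 1
    return served


def fallback_alert(served: dict[str, int], threshold: float = 0.05) -> bool:
    """Hypothetical monitor: flag when the share of fallback requests exceeds `threshold`."""
    total = served["regional"] + served["global"]
    return total > 0 and served["global"] / total > threshold
```

With a cap of 100 connections, 90 concurrent requests stay regional, while 150 push 50 requests onto the global tier and trip the alert. A fresh `RegionalCache` is needed per run because the sketch never releases connections.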

Mitigation and prevention

The initial mitigation was to increase cache capacity in us-west1, but this failed due to system issues during resizing. Instead, we moved traffic away from us-west1 to healthy regions, which mitigated the sync performance issues. Afterwards, we resized us-west1, established system health, and shifted traffic back.
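Conceptually, moving traffic away from us-west1 means skipping an unhealthy region in each client population's preference order. A minimal sketch, assuming a latency-ordered region list; apart from us-west1, the region names are hypothetical placeholders, and in practice this kind of steering would typically live in load balancing or DNS rather than in application code.

```python
def pick_region(preferred: list[str], healthy: set[str]) -> str:
    """Return the first healthy region in a latency-ordered preference list."""
    for region in preferred:
        if region in healthy:
            return region
    raise RuntimeError("no healthy region available")


# Hypothetical preference order for APAC clients: closest footprint first.
APAC_PREFERENCE = ["us-west1", "region-b", "region-c"]

# Normal operation: APAC traffic goes to the closest region.
normal = pick_region(APAC_PREFERENCE, {"us-west1", "region-b", "region-c"})

# During mitigation: us-west1 is drained, so traffic shifts to the next healthy region.
drained = pick_region(APAC_PREFERENCE, {"region-b", "region-c"})
```

Once us-west1 is resized and healthy again, adding it back to the healthy set restores the original routing.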

In addition to the aforementioned mitigations, we are also investing in the following preventative changes:

- Improvements to our global locking strategy and implementation (e.g., to avoid contention)

- Increasing our monitoring across this functional pathway to more systematically detect/prevent this and similar situations

- Updating our internal playbooks accordingly, especially for multiple fallbacks when a system is unresponsive

We recognize that this service outage caused significant disruptions to your applications and business processes. We apologize for this, and are committed to increasing the rigor of our systems and processes to prevent these types of outages going forward.

Mike and Peter (@Peter-Google)

On behalf of the broader AppSheet team

Thank you for your feedback. Please help us fix this problem ASAP.

We need an update and a fix on this ASAP. Thank you!

Waiting for the update.

UPDATE 10-July 12:31 AM PT - U.S. Pacific Time

Continuing to diagnose and mitigate the issue with engineering leads. An incident has been declared in our internal systems for tracking and post-mortem action items.

Actively troubleshooting in a videoconference call with engineering now.

Please help fix this. All my apps are opening slowly and timing out. Please, please, please.

Thank you !

Are there any updates on whether this issue can be resolved within the day? The app's behavior does not seem to be improving.

Any update? Can it be fixed within a few hours?

UPDATE 10-July 1:28 AM PT - U.S. Pacific Time

The team has put some initial mitigations in place, and traffic has been rerouted to healthy server instances. Our graphs indicate a recovery in sync times, approaching the nominal levels from before the incident.

Can you confirm on your side that latencies have improved for your apps?

Works fine on my end now. Thanks!

Thank you

Thank you, Mike

By my assessment, performance is currently at around 70% of what I usually experience.

The app seems to be working and has returned to its normal state. Thanks @Mike_Procopio.

It's working fine now, thank you.

Thank you everyone. We have concluded our emergency mitigation measures on this issue.

Again, I apologize for the service interruption and I recognize it had a significant impact on your applications.

In the coming days, we will continue to monitor our systems to ensure a full recovery, and work to finalize and test our hypotheses on root cause.

We will report back findings to the community, as well as action items on our side to prevent this in the future.

Mike

Thank you for your support.

It seems that AppSheet is working fine right now.

Hi @Mike_Procopio

I'm sure this is due to the Service Health specification, but the fault start and end times are clearly wrong.

https://status.cloud.google.com/incidents/bCk9RUNbaekMsKDQF9ZT

To begin with, how can the incident start at 2023-07-10 00:25 when the summary reports it as starting at 2023-07-09 20:00?

I appreciate that you have uploaded the Incident summary, but please examine the content carefully.

This is increasingly undermining your customers' trust.

Hi. I think the server is still slow.

It takes two to three times longer to load AppSheet pages and sync apps.

Also, Google Maps is showing errors as below.

I think it still has lots of problems here in South Korea.

I hope you keep your promise.

AppSheet is a business application used around the world. This may not be one of the cases where ALL users globally were affected (unable to use their apps), but the problem is that this is not an isolated case; we see it quite often. As you know, these apps are used in business daily, or rather hourly and minute by minute. Our clients (app users) incur losses while the apps are down. I would not raise this if it were a one-off occasion, but it happens quite often, and we suspect it may happen again even tomorrow. If users have this doubt, they will naturally leave the platform.

I'm not sure how seriously the AppSheet / Google management team takes this. Whenever an issue comes up, we have to find a way to wake up personnel in Seattle to fix it.

Based on my experience over the past years, the AppSheet dev team releases new code at midnight Seattle time. Users like us living in the Asia Pacific region (NZ, AZ, Japan, Korea, and elsewhere in the region) are the first to be affected, while you are asleep.

Please review your process for pushing new code to the platform. Why are we, who live in Asia Pacific, always the victims of your new code?

We have spoken to our local Google rep; however, they have no idea, which is understandable.

All in all, we AppSheet customers will have no choice but to cease our business. As I implied, this is a breach of the SLA. I have raised this a number of times, but there has been no reaction from Google management.

This is a risk for Google/AppSheet as a platform. Once a platform loses the support of its users, that is the end.

@Koichi_Tsuji Our Asian friends take the first hit, and the impact almost always extends well into European business hours.

@Mike_Procopio I heartily echo Koichi's comments, and also @Rifad's, about customer concerns, confidence in the platform, and the need to explore other possibilities. I have started to hear this more frequently ever since the problem in April, when all Automation was brought down by a change introduced by AppSheet/Google on the night of Thursday to Friday. Worse, the problem was solved only a full week later. The apps were NOT usable on Friday, and I had to spend the weekend working day and night moving large automations to local actions so that customers could work again on Monday.

During that incident, we asked your teams to follow the industry's established change-management practices, mainly:

- Changes should be announced ahead of time to the community.

- Changes should be performed only during low-traffic periods, NOT in the middle of the week.

- You should announce the start and end of each change so that we have the opportunity to check and give you feedback.

- You should provide a grace period during which you are ready to roll back should you receive feedback from the community that our apps have stopped working.

- We should have meaningful reporting so that we are able to communicate something to our end customers.

Also, kindly note that support tickets are simply useless and compound the problem by wasting a huge amount of time. Please do NOT tell us to open a ticket UNLESS you provide a way to contact support EXPLICITLY for reporting a service interruption. I do NOT need support; I just want to help you by reporting a bug or service interruption, so it is pointless to waste time waiting while the CS agent "investigates" (nothing), then receive meaningless instructions, and finally the inevitable "it will be reviewed by a specialist". Please provide a means to report to the "specialist" in the first place.

Thank you for your assistance!

Hi folks,

There are a lot of thoughts and feedback in this thread, which I want to address more directly, separate and distinct from the outage in question. I also want to begin by saying that the frustration everyone is sharing is completely valid. Our customers depend on a reliable and available platform, and while no SaaS product is immune from production issues, it's on us to address issues rapidly and provide transparency on status and resolution, and we have not lived up to those expectations.

With that said, I wanted to give some broader context and address some of the comments here.

We don't roll out code overnight while we are asleep; we typically begin rollouts during our business day, and they complete within a few hours. There might be exceptions when an issue requires a deployment to resolve it, but again, that's to resolve an existing issue. We wouldn't roll out code overnight for the exact reason you describe.

We also send notifications for planned changes; you can find past examples in the community, such as when IP addresses changed or the rare occasion recently when we needed to take planned downtime. The most recent issues were not related to a rollout or a change. As I mentioned, we deploy code daily during the day. We could explore notifications when code is rolled out, but we're moving towards a world where we deploy code more frequently, which makes such notifications less relevant. As we continue to evolve the platform, it becomes increasingly likely that we will break deployments up into components, so that we can deploy different pieces of the platform as needed.

In terms of scheduling, given the scope of our audience, it's not practical to schedule code rollouts around traffic low points. If we did that, we would deploy code in the middle of the night, which would impact Koichi and our other Japanese users (and we would be asleep when it rolled out). We also can't wait for the weekend, because our retail customers are often busiest during the weekend.

The answer, unfortunately, is not to release code less frequently. The data is very clear across Google and the industry that the longer the release cycle, the higher the volume of bugs and production issues, because you're changing more things at the same time.

Again, this doesn't change the fundamental point that these production issues are incredibly disruptive, and even more so when we don't effectively communicate status, resolution, and root cause. That is an area where we need to do better.

The automation issue you referred to ended up being a combination of factors, primarily miscommunication between engineering, support, and product. We've taken a number of actions to address that: we added more ways for support to clearly articulate customer impact, revisited how we decide whether to roll back, and are monitoring the community more closely.

We will follow up with a root cause, and are actively discussing changes that we will be making to continue to iterate and improve our communication, our reliability, and our support.

Thanks for the background explanation.

But @zito, that explanation is not fair.

It doesn't explain why the AppSheet release is causing problems in the Paid environment.

Wasn't AppSheet supposed to be released in the Free environment first and then released in the Paid environment after getting user feedback?

As I pointed out before, those who know and use AppSheet well are now the first victims.

Sure, a pre-release to Free users may have been implemented, but I see no indication that feedback is being collected.

If that is no longer possible, then please be honest and publicize it.

That would be very important information for end users who are not sure whether to adopt AppSheet or not.

Thanks for the response - I can clarify a few points:

@takuya_miyai wrote:

It doesn't explain why the AppSheet release is causing problems in the Paid environment.

To be specific, this most recent issue is not tied to a release, as far as I'm aware, unless there was a latent issue that was introduced in an earlier release.

If you're referring to the automation issue that is being discussed, I think it's important to be clear about how we roll out changes.

To start, bugfixes and minor changes are rolled out to everyone at once. This is standard industry-wide: if you're facing a bug, you probably don't want to hear that you have to wait 3+ weeks for the fix to roll out first to free users, then to paid users.

For user-visible changes, we roll those out to free users first, followed by paid users. That might mean we deploy new code everywhere, but it does not get executed for paid users, because the interface/frontend doesn't execute that codepath for them.

Beyond that, we roll features out to the team first, then to Google, then start rolling out to customers, potentially with opt-in preview stages.
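The staged order described above (team, then Googlers, then customers, with free users ahead of paid users and bugfixes going to everyone at once) can be sketched as a simple runtime gate. This is a hypothetical Python illustration: the stage names, the `feature_enabled` function, and the placement of the opt-in preview stage just before the general free rollout are assumptions, not AppSheet's actual rollout system.

```python
# Hypothetical rollout stages, in the order a user-visible feature reaches them.
ROLLOUT_ORDER = ["team", "googlers", "preview_opt_in", "free", "paid"]


def audience_for(user_group: str, opted_into_preview: bool) -> str:
    """Customers who opted into a preview are treated as the preview audience."""
    if user_group in ("free", "paid") and opted_into_preview:
        return "preview_opt_in"
    return user_group


def feature_enabled(rollout_stage: str, user_group: str,
                    opted_into_preview: bool = False, is_bugfix: bool = False) -> bool:
    """Return True if the feature's current rollout stage has reached this user's audience."""
    if is_bugfix:
        # Bugfixes and minor changes go to everyone at once.
        return True
    audience = audience_for(user_group, opted_into_preview)
    return ROLLOUT_ORDER.index(audience) <= ROLLOUT_ORDER.index(rollout_stage)
```

For example, a feature at the `free` stage is enabled for free users and for paid users who opted into the preview, but not yet for other paid users. Because the gate is evaluated at runtime, the same deployed code can sit on every server while the new codepath stays dormant for paid users.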

In the case of the automation issue, the problem was a regression introduced as part of a minor change or bugfix, if I remember correctly. Our failure was in not identifying the scope of the issue quickly enough to revert the change.

To be clear, we absolutely do get feedback and metrics from free users on new features, and we take that into account (along with Googlers and private preview users). But free users don't use the product in the same way as paid users, so it's not a perfect solution for minimizing risk, which is why we have preview stages as well.

In the end, everything we do is about minimizing risk: we limit rollouts as much as possible, we have monitors and automated testing, we have Googlers try features first, etc.

But to emphasize, the issue that started this thread was not connected to a release or rollout process (as far as I am aware at this point in time). Regardless of our rollout process, this issue would have manifested itself and required the same conversation we are having now.

I understand that you and your team are working hard to minimize the impact on our users.

However, the result has been last week's outage and the continuing bugs that have affected the production environment this year.

This may require Google to fundamentally change its thinking and processes.

Specifically, I believe the pre-evaluation process you described needs to be improved.

As you yourself have pointed out, free users and paid users use AppSheet differently. Similarly, do the Googlers you are asking to evaluate spend the same amount of time on AppSheet production apps as the paid users?

If you want to gather feedback, you should ask App Creators who are developing apps on a daily basis to evaluate them.

From there you will hear voices that you don't want to face, and that will generate tedious work.

But that is true feedback.

Thank you @zito for taking the time to provide this response, truly appreciated. I also value your acknowledgement of weaknesses to be improved and your transparency in communicating them.

From my side, I do understand and acknowledge the difficulties you mentioned regarding choosing a Low Traffic Period and avoiding a long release cycle. For this and for the other aspects discussed, I would like to make the following additional suggestions:

- As @takuya_miyai noted, a phased deployment, first to free accounts and then to paid plans, is very important. This is supposed to be an established practice already, so please stick to it.

- Google Cloud has a global infrastructure. Now that AppSheet is part of the Google Cloud solution, it might be high time for it to benefit from this global reach and adopt a distributed APAC-EMEA-NCSA server presence. This would make it much easier to both:

a. Manage changes during regional low-traffic periods.

b. Gain greater versatility to reroute traffic in case of impact to other regions, with simple routing/DNS changes.

- I understand the importance of logging support tickets, but please, please, please provide us with a process to report service interruptions, instead of going through the useless usual support channel. It is wasteful, frustrating, and utterly futile, while your app is down, to have an agent ask what changes you have introduced in the app, ask for access to investigate trivialities, and then respond with nonsense, after which you have to tell him it is nonsense, so that in the end he reports to the "specialist". Then this "specialist" never tells you what happened in the first place, and we come here for answers and true guidance. So what's the point? They might provide good value in guiding new users who need help setting up their apps, but this is NOT what we need. So please, please provide an appropriate alternative.

Thanks again!

@takuya_miyai wrote:

If you want to gather feedback, you should ask App Creators who are developing apps on a daily basis to evaluate them.

From there you will get voices that you guys don't want to face and that will generate tedious work. But that is true feedback.

💯

@Joseph_Seddik wrote:

It is wasteful, frustrating and utterly futile while your app is down, to have an agent asking you what changes have you introduced in the app, asks for access to investigate trivialities, then responds with nonsense, then you have to tell him this is nonsense, so at the end he could report to the "specialist". Then this "specialist" never tells you what happened from the first place, and we come here for answers and true guidance. So what's the point?

Facts!

@Mike_Procopio Thanks Mike! Appreciated.

Thanks @Mike_Procopio

I understand the causes and remedies on the platform.

What I would like to know additionally are the rules for disclosing future failure responses.

My expectation is that it will be published through the AppSheet section of GCP Service Health.

https://status.cloud.google.com/products/FWjKi5U7KX4FUUPThHAJ/history

AppSheet incidents like this one have been publicized in the Announcements category of this community.

However, the number of citizen developers who refer to this community on a daily basis is limited.

Also, with the upcoming GREAT update making AppSheet Core available on many GWS plans, there will be more users who use AppSheet but do not refer to the community.

Therefore, please make sure GCP Service Health is working properly.

Best regards.

@zito @Peter-Google @isdal

Hey Takuya,

Thank you for the feedback. We began the process of publishing through the GCP service console, but it is quite an onerous process that would have added at least several weeks before we could publish the response. Given the level of interest in this thread in timely feedback, we decided to issue it here.

In the future, we can explore publishing through the GCP console, but issues like this one would likely not rise to the level of a published incident report on that console, and we do not have complete control over what is published there.

Publishing here gives us more control and a shorter timeframe to disclosure, which I think is in the best interest of our users.

We are exploring other options for publishing service health updates that would both be centralized and allow us to respond in a timely fashion.

Thanks @zito

I understand that, for the time being, updates will be provided here.

Given our location, many of our Japanese users are unfamiliar with this community. However, I feel that this is a gap partners like us can cover. Please let me work with you on fault notifications.

One point of concern is that the most recent outage took place during the late-night hours of PST.

I know this is a factor that makes publicity in the community more difficult in this case, but I am hopeful that an announcement will be made as quickly as possible.🙏

As @Mike_Procopio reports, everyone in APAC is working hard and it's during work hours.😃
