Implementing a Modern Detection Engineering Workflow (Part 2)

Welcome to part two of this blog series where I’m sharing my methodology for implementing a modern Detection Engineering capability that uses Detection-as-Code and Chronicle. In part one, I talked about my choice of tooling, setting up a new GitHub repository, utilizing a detection framework, and configuring a detection lab. I also walked through my process for using SSDT to assist with detection development and testing and wrote an initial UDM search query to match on the events related to the attack technique of interest for this project, Kerberoasting. Part two will cover the following:

- Synchronizing rules between Chronicle and GitHub

- Staging the new detection idea

- Writing the rule

- Testing & validating the new rule

Syncing Rules Between Chronicle and GitHub

Let’s move on to getting our detections synced between Chronicle and the GitHub repository and creating a GitHub issue to track the development of the new detection.

Before we develop any new detections, let’s use the rule manager tool to pull all of our rules that currently exist in Chronicle and commit them to the GitHub repository. We can do this by executing the following commands.

# Create virtual environment using Python 3.10 or later

python -m venv ./detection

source detection/bin/activate

pip install -r requirements.txt

# Retrieve all rules from Chronicle and write them to local files

cd rule_manager/

python -m rule_cli pull-latest-rules --skip-archived

# Commit rules to main branch of GitHub repo

git add rules rule_config.yaml

git commit -m "initial commit for current rules"

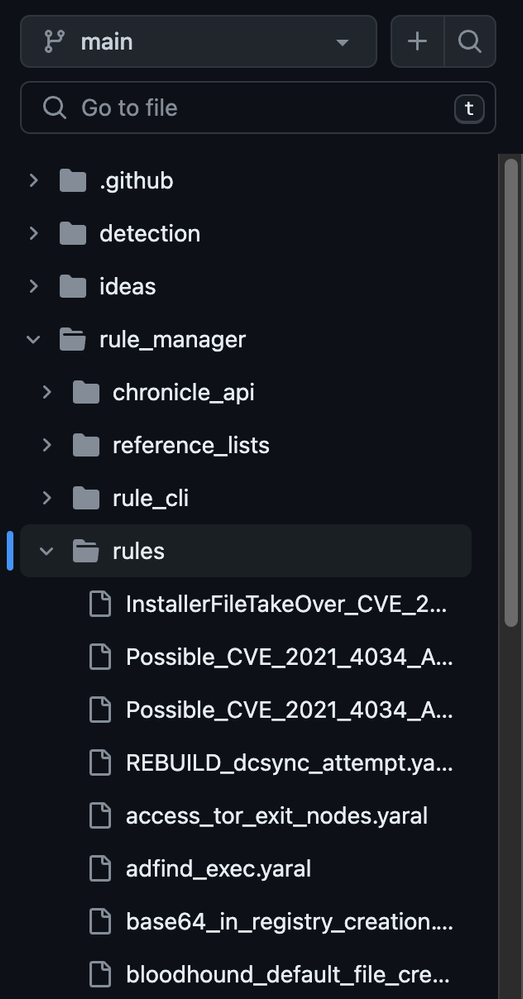

Once the above commands are executed, the rules directory in the main branch of your project will contain your YARA-L rules. The metadata and configuration for each rule is written to a single rule_config.yaml file, which we will edit later when creating a new rule.

The rule manager tool can also be used to manage reference lists in Chronicle and update rules in Chronicle based on the detection rules in your GitHub repo, which we will see later.

Staging a New Detection Idea

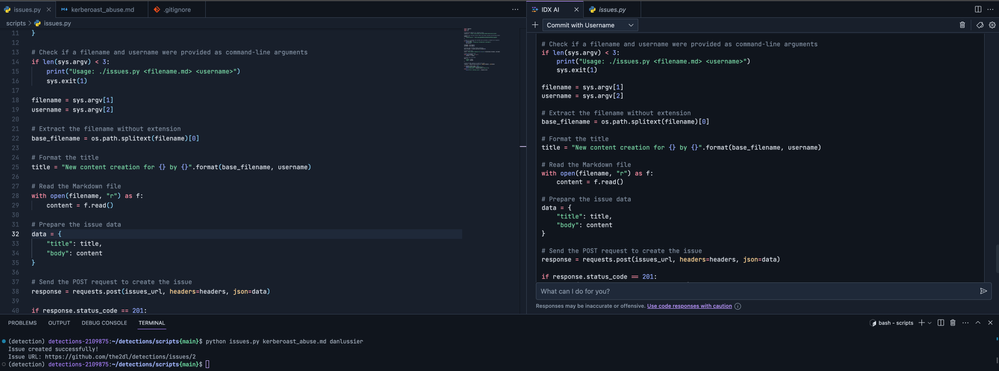

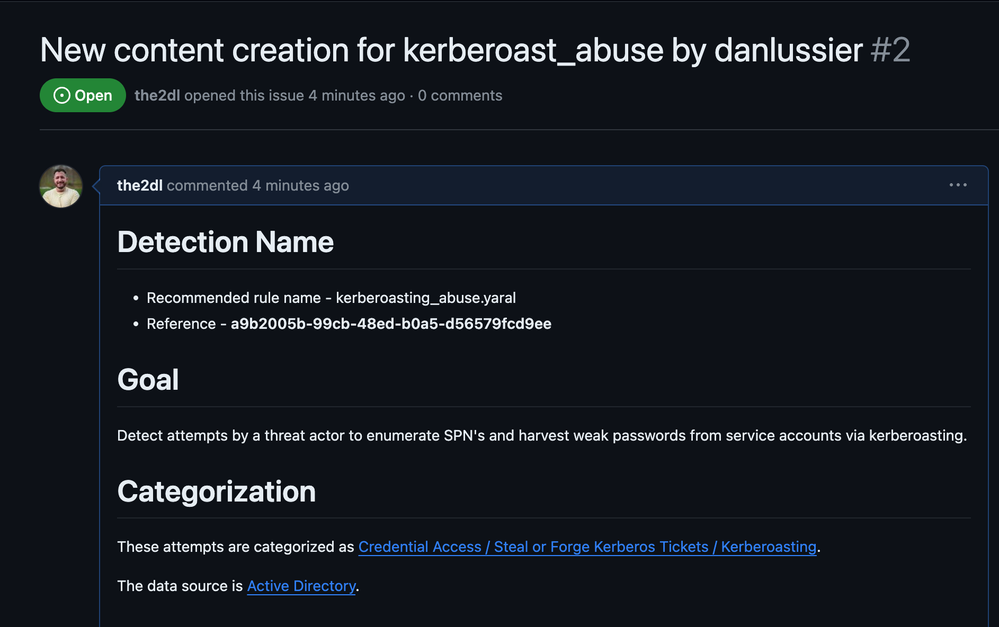

It’s now time to submit a GitHub issue to propose and track the new detection that we want to implement. To do this, I’ll use my issue creator script. As a sidebar, I’m using Project IDX for this. I needed to make some slight adjustments to the code to harvest a username and associate it with the title of the issue. I asked the IDE to update my current script, and it created a new version with the fix in less than a second.
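
For readers who don't have the issue creator script to hand, here is a minimal sketch of what such a script might do. It assumes the GitHub REST API's "create an issue" endpoint (`POST /repos/{owner}/{repo}/issues`). The function name, the title format, and the `detection-idea` label are hypothetical and are not taken from the author's script. The request is only built here, not sent.

```python
import getpass
import json
import urllib.request


def build_issue_request(owner, repo, token, technique, body, username=None):
    """Build (but do not send) a request that creates a GitHub issue."""
    # Assumption: the local account name stands in for the "harvested" username.
    username = username or getpass.getuser()
    payload = {
        # Hypothetical title format that ties the proposer to the issue.
        "title": f"[Detection Idea] {technique} (proposed by {username})",
        "body": body,
        "labels": ["detection-idea"],  # hypothetical label
    }
    return urllib.request.Request(
        url=f"https://api.github.com/repos/{owner}/{repo}/issues",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would submit the issue. Keeping the build step separate makes the payload easy to inspect first.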

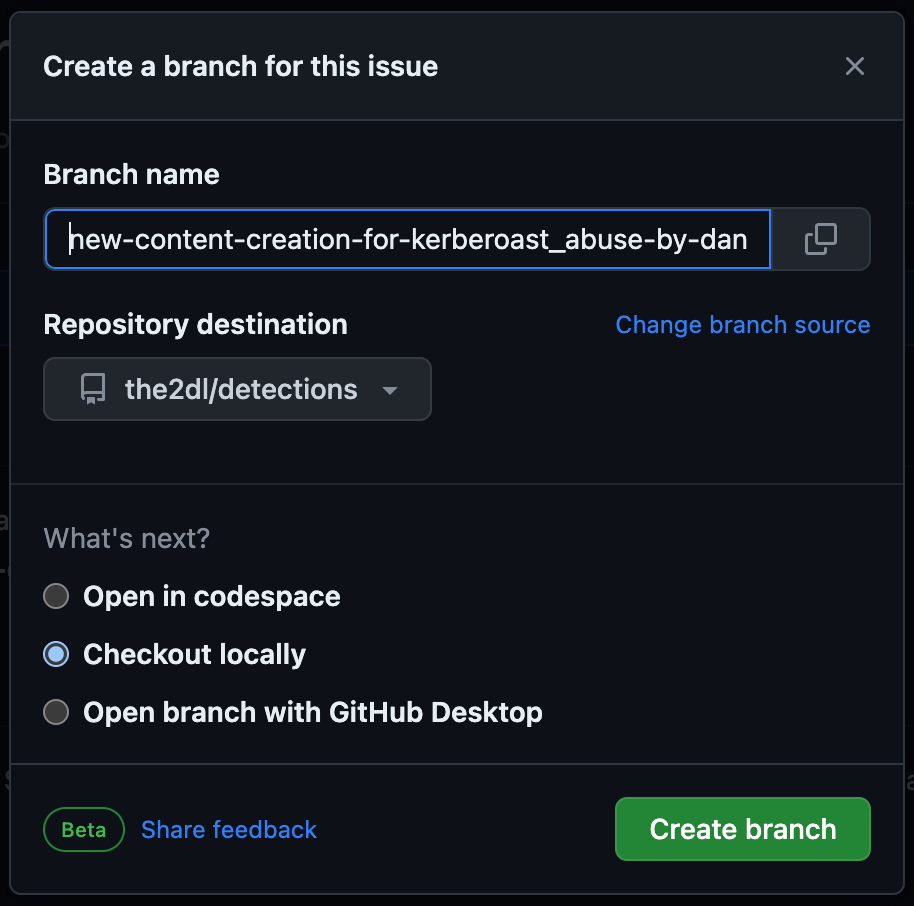

As a Detection Engineer, the next step is to share the proposed detection with my peers and request feedback on the idea and detection logic. To do this, I created a new branch in the GitHub repo from the issue that was just created. These proposed changes can be merged into the main branch of the project at a later stage once peer review & approval is obtained and all tests pass successfully.

I’m going to use Visual Studio Code to check out the newly created branch and continue developing the new detection rule!

Writing the YARA-L Rule

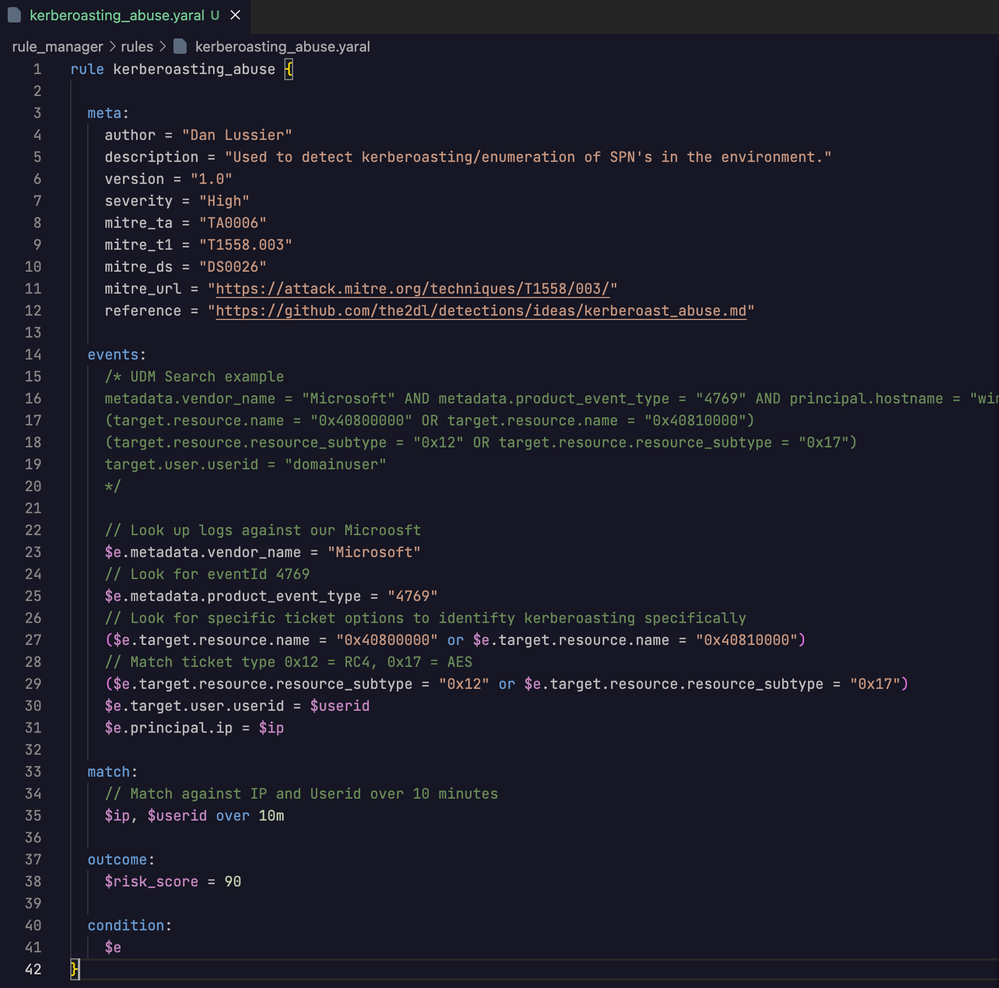

Now it’s time to write the logic for the new YARA-L rule and test it out. We’ll be using the UDM query from the documented detection idea introduced in part one and turning that into a YARA-L rule.

metadata.vendor_name = "Microsoft" AND metadata.product_event_type = "4769" AND principal.hostname = "win-dc.hack.domain"

(target.resource.name = "0x40800000" OR target.resource.name = "0x40810000")

(target.resource.resource_subtype = "0x12" OR target.resource.resource_subtype = "0x17")
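
To make the query's boolean logic explicit before it becomes a YARA-L rule, here is a small Python sketch of the same conditions as a predicate. It assumes that the three lines of the query are combined with AND and that an event is a nested dictionary keyed by the UDM field names used above. The function and constant names are illustrative only.

```python
# Ticket option values matched by the query (target.resource.name).
TICKET_OPTIONS = {"0x40800000", "0x40810000"}
# Ticket encryption types matched by the query (target.resource.resource_subtype).
ENCRYPTION_TYPES = {"0x12", "0x17"}


def matches_query(event):
    """Return True when a UDM-like event dict satisfies the search query."""
    metadata = event.get("metadata", {})
    resource = event.get("target", {}).get("resource", {})
    return (
        metadata.get("vendor_name") == "Microsoft"
        and metadata.get("product_event_type") == "4769"
        and event.get("principal", {}).get("hostname") == "win-dc.hack.domain"
        and resource.get("name") in TICKET_OPTIONS
        and resource.get("resource_subtype") in ENCRYPTION_TYPES
    )
```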

I’m going to use the new YARA-L extension for VSCode to assist me with writing my rule. The VSCode extension provides syntax highlighting, bracket matching, and the same color palette that you’re used to seeing in Chronicle’s rules editor in the UI. The extension doesn’t have all the run-time checking that the Chronicle UI does but we’ll show how to validate syntax during this deployment.

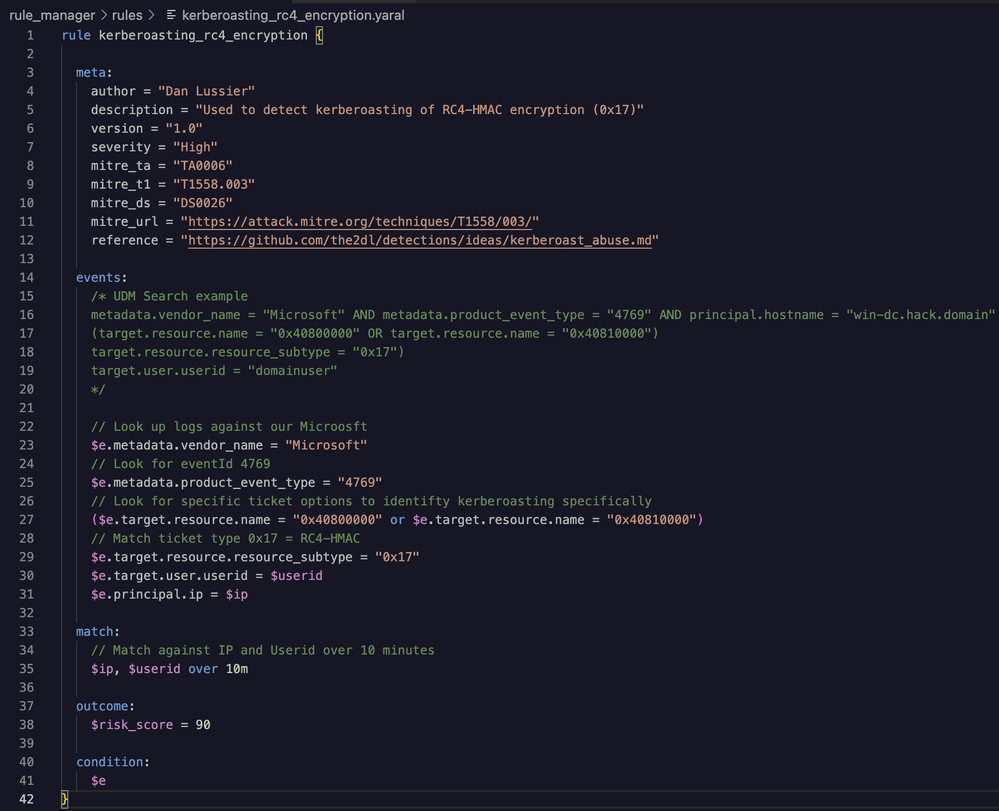

When creating detections, it’s important to have a repeatable “meta” section for your YARA-L rules. Mine is very basic but includes the fields that are important to my workflow, including a link to the reference document we worked on earlier so that it can be consulted when needed.

YARA-L Syntax Validation & Detection Testing

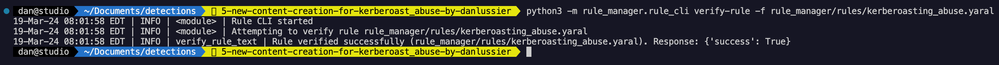

Now that the first version of our rule is written, it’s time to validate the rule’s syntax and test that it matches on the intended events. Chronicle’s rules editor in the UI validates the syntax of YARA-L rules as they’re being worked on, but for this project, we’re going to validate the syntax of our new rule using Chronicle’s REST API and the rule manager tool.

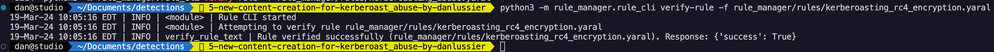

In the image below, you can see that the syntax of my YARA-L rule was verified successfully.

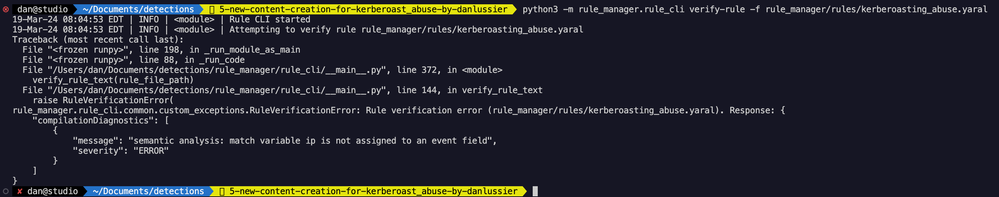

The image below shows what happens when rule verification fails for a YARA-L rule. In this example, I added a bunch of random characters at the end of line 31 of the rule.

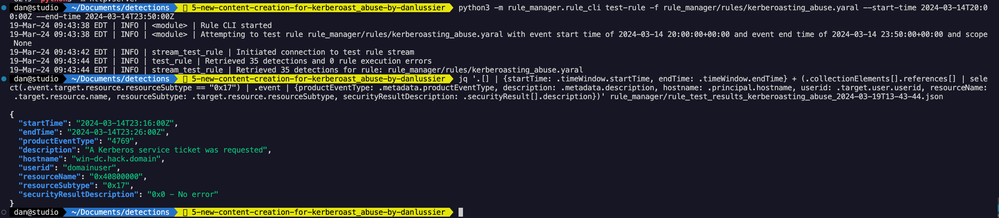

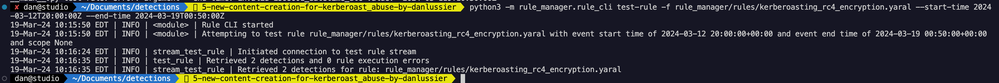

Now that we’ve confirmed the syntax of our rule is valid, we need to test the rule against the security events that are ingested in Chronicle and gain an understanding of its fidelity. I’m going to use the rule manager tool’s “test-rule” command to accomplish this. Detections that are returned from the rule during testing are written to a local JSON file for analysis.

Note, it’s possible to test rules using Chronicle’s rules editor in the user interface. For this project, I’m demonstrating an alternative method for testing rules via Chronicle’s REST API.

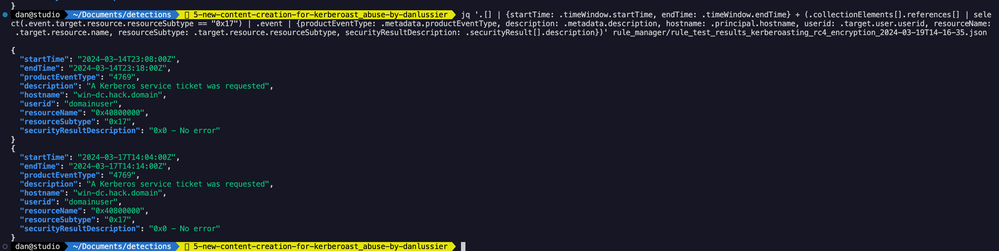

One of the things I’m looking for in this detection is the 0x17 (RC4-HMAC) encryption type, as it represents a weaker hash (Kerberos 5 TGS, KRB5TGS) that would be easier for an attacker to crack and is likely far rarer in the telemetry. In my small lab environment, over a short window of time around when I ran the Kerberoast testing, the rule returns 35 detections when looking at both the 0x12 and 0x17 encryption types. I decided to use jq to filter the results on 0x17 and see how much narrowing the rule would increase its fidelity.

Here’s that jq command for future reference. You can modify it to work with many of the detections you’re building. Simply adjust the fields you want returned from the JSON document.

jq '.[] | {startTime: .timeWindow.startTime, endTime: .timeWindow.endTime} + (.collectionElements[].references[] | select(.event.target.resource.resourceSubtype == "0x17") | .event | {productEventType: .metadata.productEventType, description: .metadata.description, hostname: .principal.hostname, userid: .target.user.userid, resourceName: .target.resource.name, resourceSubtype: .target.resource.resourceSubtype, securityResultDescription: .securityResult[].description})' youroutput.json
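
If you would rather do this filtering in Python, the following is a rough equivalent of the jq filter above. It assumes the output file has the shape implied by that filter: a list of detections, each with a `timeWindow` and `collectionElements[].references[].event`. The function names are hypothetical. Unlike the jq version, this sketch omits the description fields and emits exactly one row per matching event.

```python
import json


def load_detections(path):
    """Read the JSON file written during rule testing."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def events_with_subtype(detections, subtype="0x17"):
    """Yield one flat row per referenced event with the given encryption type."""
    for detection in detections:
        window = detection.get("timeWindow", {})
        for element in detection.get("collectionElements", []):
            for reference in element.get("references", []):
                event = reference.get("event", {})
                target = event.get("target", {})
                resource = target.get("resource", {})
                if resource.get("resourceSubtype") != subtype:
                    continue
                yield {
                    "startTime": window.get("startTime"),
                    "endTime": window.get("endTime"),
                    "productEventType": event.get("metadata", {}).get("productEventType"),
                    "hostname": event.get("principal", {}).get("hostname"),
                    "userid": target.get("user", {}).get("userid"),
                    "resourceName": resource.get("name"),
                    "resourceSubtype": resource.get("resourceSubtype"),
                }
```

A call such as `list(events_with_subtype(load_detections("youroutput.json")))` would return the filtered rows.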

From the filtered results, we can see that targeting 0x12 is going to be noisier and require quite a bit more tuning. Of the 35 total events in my search window, 1 was 0x17 and 34 were 0x12.
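
A breakdown like this can also be produced directly from the test output. The sketch below tallies referenced events per encryption type. It assumes the output file is a list of detections with `collectionElements[].references[].event`, and the function name is hypothetical.

```python
from collections import Counter


def count_by_encryption_type(detections):
    """Count referenced events per target.resource.resourceSubtype value."""
    counts = Counter()
    for detection in detections:
        for element in detection.get("collectionElements", []):
            for reference in element.get("references", []):
                resource = reference.get("event", {}).get("target", {}).get("resource", {})
                # Events without the field are grouped under "unknown".
                counts[resource.get("resourceSubtype", "unknown")] += 1
    return counts
```

A heavily skewed count is a quick signal of which encryption type would dominate the tuning effort.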

Because of this, we’re going to adjust the YARA-L rule to target only 0x17 and rename the detection to specify that it’s looking for the abuse of weakly hashed passwords. You could also kick off an additional workflow for another detection that investigates abuse of 0x12 (AES) encryption, which will require quite a bit more tuning.

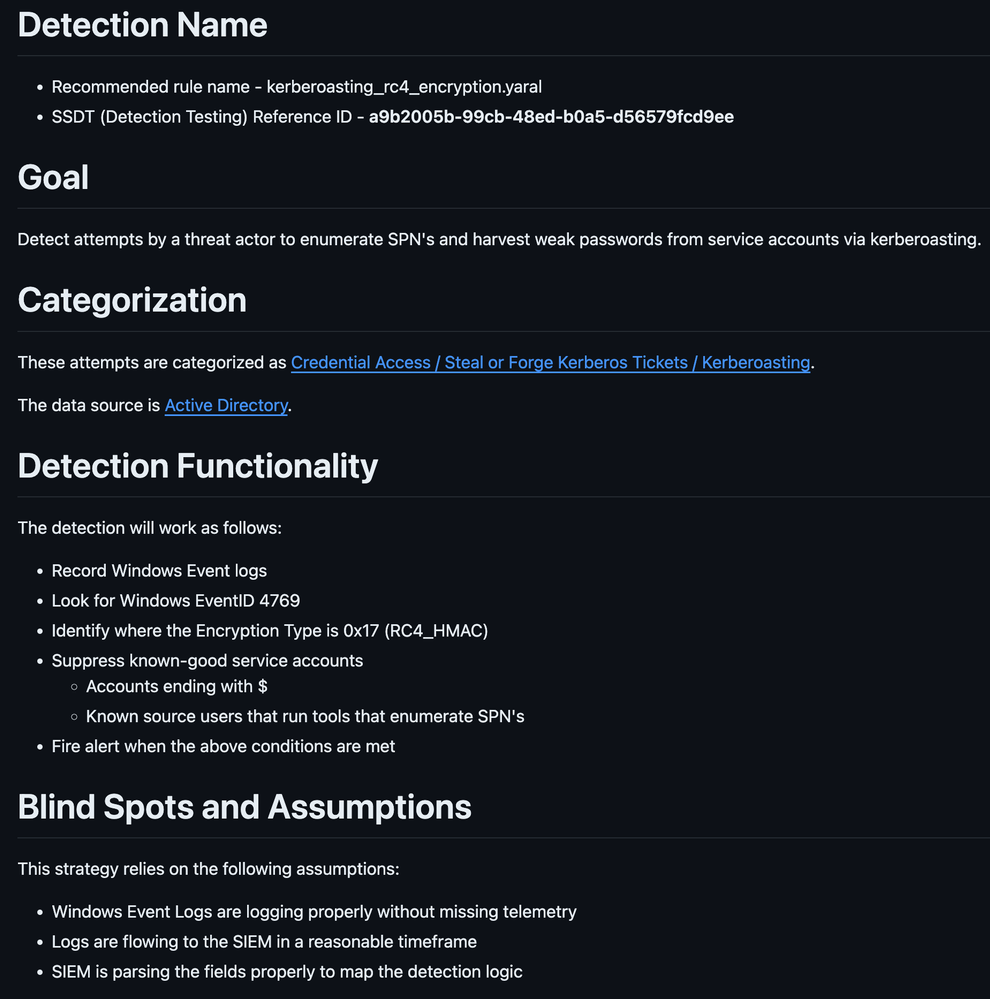

The updated detection idea is shown in the image below and can be found on GitHub. The rule name has been changed to kerberoasting_rc4_encryption.md and references to 0x12 (AES) have been removed.

The updated version of the YARA-L rule is shown below to reflect the updates to our detection idea and methodology.

The terminal output above shows that the syntax of the rule is still valid after it was modified. Let’s test the rule again to check how many detections are returned. To make sure this detection is high fidelity, we’ll run one more test over a two-week window and verify there aren’t a ton of false positives.

Validating the results shows two detections; the second one was generated by additional testing of the Kerberoasting technique.

Before we move on to creating a pull request in GitHub that contains our proposed changes, let’s talk about how the rule config (rule_config.yaml) file works. The rule_config.yaml file contains an entry for each YARA-L rule that’s in the rules directory along with the configuration and metadata for each rule.
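
Because every rule file is expected to have a matching config entry, a quick consistency check can catch a forgotten entry before a pull request is opened. The sketch below is not part of the rule manager tool. It assumes the config has already been parsed into a dictionary (for example with PyYAML's `yaml.safe_load`) and that its keys are rule file names ending in `.yaral`. The function name is hypothetical.

```python
from pathlib import Path


def find_config_mismatches(rules_dir, rule_config):
    """Compare rule files on disk with the keys of the parsed rule config."""
    rule_files = {path.name for path in Path(rules_dir).glob("*.yaral")}
    configured = set(rule_config)
    return {
        # Rule files that have no entry in the config.
        "missing_config": sorted(rule_files - configured),
        # Config entries that point at no rule file.
        "missing_file": sorted(configured - rule_files),
    }
```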

I want the new Kerberoasting rule to be enabled when it’s deployed to Chronicle, but I don’t want it to generate alerts at this time. To accomplish this, I create a new entry for the rule in the config file as follows and set the enabled option to true and the alerting option to false.

kerberoasting_rc4_encryption.yaral:
  enabled: true
  alerting: false

When this rule is deployed, it will be “live enabled” and generate “detections” in Chronicle for the security team to review as needed. The rule will not generate “alerts” for our security team to triage. The reason I’m doing this is so that I can monitor any detections for the rule and apply any tuning to it for a period of 7-14 days. After that validation period has passed, the team can make the decision whether to enable alerting for the rule or not.
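
The combinations of the two options can be summarized in a few lines of Python. This is only an illustration of the behavior described above. The function name is hypothetical, and the handling of a rule that is not enabled is an assumption.

```python
def describe_rule_state(config_entry):
    """Summarize what a rule does for a given enabled/alerting combination."""
    enabled = config_entry.get("enabled", False)
    alerting = config_entry.get("alerting", False)
    if not enabled:
        # Assumption: a rule that is not enabled produces nothing to review.
        return "not enabled: no detections or alerts"
    if alerting:
        return "live enabled: detections are raised as alerts for triage"
    return "live enabled: detections only, no alerts"
```

With the config entry above, the function returns the "detections only" description, which matches the tuning period the rule is meant to go through.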

Wrap up

That’s it for part two where I demonstrated how to do the following:

- Synchronize rules between Chronicle and GitHub using the rule manager tool

- Stage a new detection idea

- Write a YARA-L rule

- Test & validate a rule

As a reminder, you can find links to the tools and code I’m using for this project here. You can also find additional awesome code samples in the Chronicle GitHub organization (including sample rules).

Join me in part three where I’ll show you how a team of Detection Engineers can propose changes to detection content, deploy rule updates to Chronicle, re-trigger events in SSDT for final validation, and tune rules with reference lists.
