- AppSheet

- AppSheet Forum

- AppSheet Q&A

- Best Practice for Data Recovery from "Recovery Mod...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I recently had a user of an app manage to accrue 444 pending syncs on their app that had recently had a data schema change.

In attempt to help this user not have to re-enter nearly 12 hours of work, I moved the app into “Recovery Mode”. According to the documentation, this should ignore sync errors for the user and send all pending data in a Recovery.txt file to the app owners file storage.

Recovery mode did not allow the user’s app to sync, so we went the route of “Reset Changes” which also creates a recovery file. However, in this case, it created 444 recovery files!! Each one containing the data for a single sync operation.

The files are in JSON, which can be converted more or less easily to CSV / tabular format, or moved to the SQL database via appscript or something. But what I’m stuck on is how to process 444 individual files.

Anyone have any creative ideas to collate and parse the files? Maybe a Python boilerplate I can tinker with?

@Bellave_Jayaram @MultiTech_Visions @Grant_Stead @Jonathon @Derek @QREW_Ben @CJ_QREW

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For the second half of the fix, I wrote a couple of Javascript functions to parse the JSON data in the collated file and extract only the row data for each table that had sync data. I ran these functions in the Chrome browser console (because you can execute JS from there) and then copied the console log data into json2table.com to get it in tabular format. Then I pasted them into a spreadsheet to then clean for entry into the DB.

Interesting note: It turns out there were only 200 files (200 rows) in the Recovery File, not 444. I wonder if AppSheet caps the Recovery Data process at 200 sync items?

@praveen are you aware of any limit on the recovery file creation?

I suppose I could have written a few more lines of JS to just generate the html table, or even just run the whole bit in AppScript and used JDBC to go right into the DB… Next time ![]()

Here’s the code for anyone who has the same issue.

function getTables (data) {

var tableNames = [];

for (i = 0; i < data.length; i++) {

tableNames.push(data[i].tableName);

};

var distictTableNames = [...new Set(tableNames)];

console.log(distictTableNames);

return distictTableNames;

};

function extractRows (data) {

var tables = getTables(data);

var numTables = tables.length;

var betterData = [];

for (j = 0; j < numTables; j++) {

console.log("entering for loop")

var thisTable = tables[j];

console.log("looping through this tables rows: "+thisTable );

var filterData = data.filter(table => table.tableName === thisTable);

betterData.push([]);

console.log(`${thisTable} has ${filterData.length} rows`);

for (i = 0; i < filterData.length; i++) {

betterData[j].push(filterData[i].row);

};

console.log(JSON.stringify(betterData[j]));

};

console.log(`Looped through data and extracted rows for ${numTables}: ${tables}`);

return true;

};- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If this is an isolated incident, one quick way of handling it would be to run a VBA script to merge all .csv files in a folder into one workbook with many sheets.

From there its pretty easy to bash all of those sheets together into a single master sheet by using INDIRECT() and ROW() formulas to select the cells from each unique sheet.

Janky, but fast and effective.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you give an example of the data structure in these files?

Creating a quick script, or some spreadsheet finagling would probably get you what you need.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Marc_Dillon Here’s an example (with some client data removed)

{

“tableName”: “VenueSpace”,

“row”: {

“_RowNumber”: “1167”,

“ID”: “MGM474”,

“CompanyID”: “UPINPX6B”,

“VenueSpaceTypeID”: “3F0D3F00”,

“Designation”: “Meeting Room”,

“RoomName”: “Room 301”,

“VenueMapLocation”: “”,

“FloorLevel”: “3”,

“UnitOfMeasurement”: “Feet”,

“DimensionLength”: “38.67’”,

“DimensionWidth”: “28.83’”,

“CeilingHeight”: “11’-14’”,

“Chandelier”: “”,

“RiggingHighBeam”: “”,

“RiggingLowBeam”: “”,

“Soffit”: “”,

“TotalSqFt”: “1115”,

“BuiltInAV”: “N”,

“AVNotes”: “”,

“BuiltInFurniture”: “”,

“BuiltInFurnitureNotes”: “”,

“SpaceFloorplan”: “”,

“LocationInFloorplan”: “”,

“Notes”: “”,

“CreationDateTime”: “”,

“ModifiedDateTime”: “03/26/2020 18:25:33”,

“Related Venue Space Subs”: “”,

“Related Venue Space Capacities”: “91693 , 91694 , 91695 , 91696 , 91697 , 91698 , 91699 , 91700 , 91701”,

“Computed Dimensions”: "38.67’ L X 28.83’ W ",

“BuiltInAV_calc”: “No”,

“Related Venue Links”: “”,

“Related Venue Images”: “”,

“Related Venue Infos”: “”

},

“appVersion”: “1.000677”,

“userSettings”: {

“_RowNumber”: “0”,

“_EMAIL”: “”,

“_NAME”: “”,

“_LOCATION”: “”,

“Options Heading”: “”,

“Role”: “Super”,

“Option 2”: “”,

“Country Option”: “”,

“Language Option”: “”,

“Option 5”: “”,

“Option 6”: “”,

“Option 7”: “”,

“Option 8”: “”,

“Option 9”: “”,

“_THISUSER”: “onlyvalue”

},

“mechanism”: “Form”,

“viewName”: “Venue Space Form”,

“type”: “UpdateTableRow”,

“actionType”: “UpdateTableRow”,

“failureCount”: 0,

“requestId”: 57856484,

“timestamp”: “2020-03-27T01:25:47.79Z”,

“timeStamp”: “2020-03-27T01:25:47.79Z”

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Are these to be synced to a single table? Are the values to be synced to the same columns in every JSON file (in other words, does each JSON file have the same number and named columns to be synced)?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I’m assuming yes.

424 are from one table. Roughly 20 are from another table.

To Jonathans point, that would be pretty effective but the files are all in JSON format, not yet CSV. There are easy tools to parse a JSON file into CSV, but I’d still have to collate the files.

I’m mostly stuck on Txt file collation

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I got the first part figured out!

I joined them into one file with a Command Prompt command.

Now to parse the 13,000 line JSON file ![]()

Thanks for the suggestions, everyone!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hahaha!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For the second half of the fix, I wrote a couple of Javascript functions to parse the JSON data in the collated file and extract only the row data for each table that had sync data. I ran these functions in the Chrome browser console (because you can execute JS from there) and then copied the console log data into json2table.com to get it in tabular format. Then I pasted them into a spreadsheet to then clean for entry into the DB.

Interesting note: It turns out there were only 200 files (200 rows) in the Recovery File, not 444. I wonder if AppSheet caps the Recovery Data process at 200 sync items?

@praveen are you aware of any limit on the recovery file creation?

I suppose I could have written a few more lines of JS to just generate the html table, or even just run the whole bit in AppScript and used JDBC to go right into the DB… Next time ![]()

Here’s the code for anyone who has the same issue.

function getTables (data) {

var tableNames = [];

for (i = 0; i < data.length; i++) {

tableNames.push(data[i].tableName);

};

var distictTableNames = [...new Set(tableNames)];

console.log(distictTableNames);

return distictTableNames;

};

function extractRows (data) {

var tables = getTables(data);

var numTables = tables.length;

var betterData = [];

for (j = 0; j < numTables; j++) {

console.log("entering for loop")

var thisTable = tables[j];

console.log("looping through this tables rows: "+thisTable );

var filterData = data.filter(table => table.tableName === thisTable);

betterData.push([]);

console.log(`${thisTable} has ${filterData.length} rows`);

for (i = 0; i < filterData.length; i++) {

betterData[j].push(filterData[i].row);

};

console.log(JSON.stringify(betterData[j]));

};

console.log(`Looped through data and extracted rows for ${numTables}: ${tables}`);

return true;

};- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Better data ![]()

![]()

Kinda seems like you have just established the best practices my friend.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is lame… Like it would be cool if we had some appsheet developer tools to be able to handle this recovery… ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Recovery used to be epic. You’d turn on recover mode and the system would spit the records into the data source - it was up to you (the developer) to be there to catch and fix the records as they came in.

Then it turned into a nightmare. So much so that when a client and I faced trying to recover a couple hundred (not even 400 so Kudos to @Stefan_Quartemont), after spending an hour trying to find a smooth way to recover the records we abandoned it and had people re-enter things. ![]()

There needs to be a better way.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Grant_Stead, @MultiTech_Visions - I finished a prototype node.js app that collates recovery files and scans the structures, builds a temporary table schema in a DB and generates insert statements for each row in each table and send them those temp tables.

I think this could become a web service: zipped folder of recovery data in >> CSV data out. then you can load that in your datasource how you like.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And apparently there is a “new” action that allows the user to import via CSV… maybe the whole recovery process can be kicked to the user to handle…

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

CSV upload into the App Data Sources would be AWESOME. And make this process a little easier perhaps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

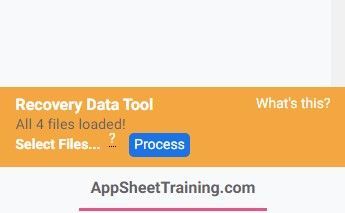

Hi Stefan, thank you for creating this tool. I tried it this weekend but for some reason I can't get the final step to work.

I've successfully uploaded the files, but the "Process" button doesn't become grey and clickable as per step no 7 in your instructions.

Your advice will be much appreciated.

-

Account

1,677 -

App Management

3,099 -

AppSheet

1 -

Automation

10,322 -

Bug

983 -

Data

9,676 -

Errors

5,733 -

Expressions

11,779 -

General Miscellaneous

1 -

Google Cloud Deploy

1 -

image and text

1 -

Integrations

1,610 -

Intelligence

578 -

Introductions

85 -

Other

2,904 -

Photos

1 -

Resources

538 -

Security

827 -

Templates

1,309 -

Users

1,559 -

UX

9,110

- « Previous

- Next »

| User | Count |

|---|---|

| 43 | |

| 28 | |

| 24 | |

| 24 | |

| 13 |

Twitter

Twitter